Migrating my Full Text Indexer to .NET Core (supporting multi-target NuGet packages)

So it looks increasingly like .NET Core is going to be an important technology in the near future, in part because Microsoft is developing much of it in the open (in a significant break from their past approach to software), in part because some popular projects support it (Dapper, AutoMapper, Json.NET) and in part because of excitement from blog posts such as ASP.NET Core – 2300% More Requests Served Per Second.

All I really knew about it was that it was a cut-down version of the .NET framework which should be able to run on platforms other than Windows, which might be faster in some cases and which may still undergo some important changes in the near future (such as moving away from the new "project.json" project files and back to something more traditional in terms of Visual Studio projects - see The Future of project.json in ASP.NET Core).

To try to find out more, I've taken a codebase that I wrote years ago and have migrated it to .NET Core. It's not enormous but it spans multiple projects, has a (small-but-better-than-nothing) test suite and supports serialising search indexes to and from disk for caching purposes. My hope was that I would be able to probe some of the limitations of .NET Core with this non-trivial project but that it wouldn't be such a large task that it would take more than a few sessions spaced over a few days to complete.

Spoilers

Would I be able to migrate one project at a time within the solution to .NET Core and still have the whole project building successfully (while some of the other projects were still targeting the "full fat" .NET framework)? Yes, but some hacks are required.

Would it be easy (or even possible) to create a NuGet package that would work on both .NET Core and .NET 4.5? Yes.

Would the functionality that is no longer present in .NET Core cause me problems? Largely, no. The restricted reflection capabilities, no - if I pull in an extra dependency. The restricted serialisation facilities, yes (but I'm fairly happy with the solution and compromises that I ended up with).

What, really, is .NET Core (and what is the Full Text Indexer)?

Essentially,

.NET Core is a new cross-platform .NET Product .. [and] is composed of the following parts: A .NET runtime .. A set of framework libraries .. A set of SDK tools and language compilers .. The 'dotnet' app host, which is used to launch .NET Core apps

(from Scott Hanselman's ".NET Core 1.0 is now released!" post)

What .NET Core means in the context of this migration is that there are new project types in Visual Studio to use that target new .NET frameworks. Instead of .NET 4.6.1, for example, there is "netstandard1.6" for class libraries and "netcoreapp1.0" for console applications.

The new Visual Studio project types become available after you install the Visual Studio Tooling - alternatively, the "dotnet" command line tool makes things very easy, so you could create projects using nothing more than notepad and "dotnet" if you want to! Since I was just getting started, I chose to stick in my Visual Studio comfort zone.

The Full Text Indexer code that I'm migrating was something that I wrote a few years ago while I was working with a Lucene integration ("this full text indexing lark.. how hard could it really be!"). It's a set of class libraries; "Common" (which has no dependencies other than the .NET framework), "Core" (which depends upon Common), "Helpers" (which depends upon both Common and Core), and "Querier" (which also depends upon Common and Core). Then there is a "UnitTests" project and a "Tester" console application, which loads some data from a Sqlite database file, constructs an index and then performs a search or two (just to demonstrate how it works end-to-end).

My plan was to try migrating one project at a time over to .NET Core, to move in baby steps so that I could be confident that everything would remain in a working state for most of the time.

Creating the first .NET Core project

The first thing I did was delete the "Common" project entirely (deleted it from Visual Studio and then manually deleted all of the files) and then created a brand new .NET Core class library called "Common". I then used my source control client to revert the deletions of the class files so that they appeared within the new project's folder structure. I expected to then have to "Show All Files" and explicitly include these files in the project but it turns out that .NET Core project files don't specify files to include, it's presumed that all files in the folder will be included. Makes sense!

It wouldn't compile, though, because some of the classes have the [Serializable] attribute on them and this doesn't exist in .NET Core. As I understand it, that's because the framework's serialisation mechanisms have been stripped right back with the intention of the framework being able to specialise at framework-y core competencies and for there to be an increased reliance on external libraries for other functionality.

This attribute is used throughout my library because there is an IndexDataSerialiser that allows an index to be persisted to disk for caching purposes. It uses the BinaryFormatter to do this, which requires that the types that you need to be serialised be decorated with the [Serializable] attribute or that they implement the ISerializable interface. Neither the BinaryFormatter nor the ISerializable interface is available within .NET Core. I will need to decide what to do about this later - ideally, I'd like to continue to be able to support reading and writing to the same format as I have done before (if only to see if it's possible when migrating to Core). For now, though, I'll just remove the [Serializable] attributes and worry about it later.

So, with very little work, the Common project was compiling for the "netstandard1.6" target framework.

Unfortunately, the projects that rely on Common weren't compiling because their references to it were removed when I removed the project from the VS solution. And, if I try to add references to the new Common project I'm greeted with this:

A reference to 'Common' could not be added. An assembly must have a 'dll' or 'exe' extension in order to be referenced.

The problem is that Common is being built for "netstandard1.6" but only that framework. I also want it to support a "full fat" .NET framework, like 4.5.2 - in order to do this I need to edit the project.json file so that the build process creates multiple versions of the project, one for .NET 4.5.2 as well as the one for netstandard. That means changing it from this:

    {
      "version": "1.0.0-*",
      "dependencies": {
        "NETStandard.Library": "1.6.0"
      },
      "frameworks": {
        "netstandard1.6": {
          "imports": "dnxcore50"
        }
      }
    }

to this:

    {
      "version": "1.0.0-*",
      "dependencies": {},
      "frameworks": {
        "netstandard1.6": {
          "imports": "dnxcore50",
          "dependencies": {
            "NETStandard.Library": "1.6.0"
          }
        },
        "net452": {}
      }
    }

Two things have happened - an additional entry has been added to the "frameworks" section ("net452" joins "netstandard1.6") and the "NETStandard.Library" dependency has moved from being something that is always required by the project to something that is only required by the project when it's being built for netstandard.

Now, Common may be added as a reference to the other projects.

However.. they won't compile. Visual Studio will be full of errors that required classes do not exist in the current context.

Adding a reference to a .NET Core project from a .NET 4.5.2 project in the same solution

Although the project.json configuration does mean that two versions of the Common library are being produced (looking in the bin/Debug folder, there are two sub-folders "net452" and "netstandard1.6" and each has its own binaries in), it seems that the "Add Reference" functionality in Visual Studio doesn't (currently) support adding a reference from a traditional project to a .NET Core project like this. There is an issue on GitHub about this; Allow "Add Reference" to .NET Core class library that uses .NET Framework from a traditional class library but it seems like the conclusion is that this will be fixed in the future, when the changes have been completed that move away from .NET Core projects having a project.json file and towards a new kind of ".csproj" file.

There is a workaround, though. Instead of selecting the project from the Add Reference dialog, you click "Browse" and then select the "Common.dll" file in the "Common/bin/Debug/net452" folder. Then the project will build. This isn't a perfect solution, though, since it will always reference the Debug build. When building in Release configuration, you also want the referenced binaries from other projects to be built in Release configuration. To do that, I had to open each ".csproj" file in notepad and change

    <Reference Include="Common">
      <HintPath>..\Common\bin\Debug\net452\Common.dll</HintPath>
    </Reference>

to

    <Reference Include="Common">
      <HintPath>..\Common\bin\$(Configuration)\net452\Common.dll</HintPath>
    </Reference>

A little bit annoying but not the end of the world (credit for this fix, btw, goes to this Stack Overflow answer to Attach unit tests to ASP.NET Core project).

What makes it even more annoying is that the link from the referencing project (say, the Core project) to the referenced project (the Common project) is not as tightly integrated as when a project reference is normally added through Visual Studio. For example, while you can set breakpoints on the Common project and they will be hit when the Core project calls into that code, using "Go To Definition" to navigate from code in the Core project into code in the referenced Common project doesn't work (it takes you to a "from metadata" view rather than taking you to the actual file). On top of this, the referencing project doesn't know that it needs to be rebuilt if the referenced project is rebuilt - so, if the Common library is changed and rebuilt then the Core library may continue to work against an old version of the Common binary unless you explicitly rebuild Core as well.

These are frustrations that I would not want to live with long term. However, the plan here is to migrate all of the projects over to .NET Core and so I think that I can put up with these limitations so long as they only affect me as I migrate the projects over one-by-one.

The second project (additional dependencies required)

I repeated the procedure for the second project; "Core". This also contained files with types marked as [Serializable] (which I just removed for now) and there was the IndexDataSerialiser class that used the BinaryFormatter to allow data to be persisted to disk - this also had to go, since there was no support for it in .NET Core (I'll talk about what I did with serialisation later on). I needed to add a reference to the Common project - thankfully adding a reference to a .NET Core project from a .NET Core project works perfectly, so the workaround that I had to apply before (when the Core project was still .NET 4.5.2) wasn't necessary.

However, it still didn't compile.

In the "Core" project lives the EnglishPluralityStringNormaliser class, which is used to adjust tokens (ie. words) so that the singular and plural versions of the same word are considered equivalent (eg. "cat" and "cats", "category" and "categories"). Internally, it generates a compiled LINQ expression to try to perform its work as efficiently as possible and it requires reflection to do that. Calling "GetMethod" and "GetProperty" on a Type is not supported in netstandard, though, and an additional dependency is required. So the Core project.json file needed to be changed to look like this:

    {
      "version": "1.0.0-*",
      "dependencies": {
        "Common": "1.0.0-*"
      },
      "frameworks": {
        "netstandard1.6": {
          "imports": "dnxcore50",
          "dependencies": {
            "NETStandard.Library": "1.6.0",
            "System.Reflection.TypeExtensions": "4.1.0"
          }
        },
        "net452": {}
      }
    }

The Common project is a dependency regardless of what the target framework is during the build process but the "System.Reflection.TypeExtensions" package is also required when building for netstandard (but not .NET 4.5.2), as this includes extension methods for Type such as "GetMethod" and "GetProperty".

Note: Since these are extension methods in netstandard, a "using System.Reflection;" statement is required at the top of the class - this is not required when building for .NET 4.5.2 because "GetMethod" and "GetProperty" are instance methods on Type.
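To illustrate the kind of reflection call involved, here is a minimal sketch (it is not the actual EnglishPluralityStringNormaliser code) that uses "GetMethod" and "GetProperty" to build compiled LINQ expressions. The class and method names are made up for the example; the part that matters is the "using System.Reflection;" line, which the netstandard1.6 build needs in order to find the extension methods.

```csharp
using System;
using System.Linq.Expressions;
using System.Reflection; // Required on netstandard1.6, where GetMethod / GetProperty are extension methods on Type

// Hypothetical helper class, for illustration only
public static class ReflectionSketch
{
    // Builds a compiled delegate equivalent to: value => value.EndsWith(suffix)
    public static Func<string, bool> BuildEndsWithCheck(string suffix)
    {
        var valueParameter = Expression.Parameter(typeof(string), "value");
        MethodInfo endsWith = typeof(string).GetMethod("EndsWith", new[] { typeof(string) });
        var body = Expression.Call(
            valueParameter,
            endsWith,
            Expression.Constant(suffix, typeof(string)));
        return Expression.Lambda<Func<string, bool>>(body, valueParameter).Compile();
    }

    // Builds a compiled delegate equivalent to: value => value.Length
    public static Func<string, int> BuildLengthReader()
    {
        var valueParameter = Expression.Parameter(typeof(string), "value");
        PropertyInfo length = typeof(string).GetProperty("Length");
        var body = Expression.Property(valueParameter, length);
        return Expression.Lambda<Func<string, int>>(body, valueParameter).Compile();
    }
}
```

The same source should compile for both targets; when building for .NET 4.5.2 the "using System.Reflection;" line is still harmless, since it is needed there for MethodInfo and PropertyInfo anyway.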

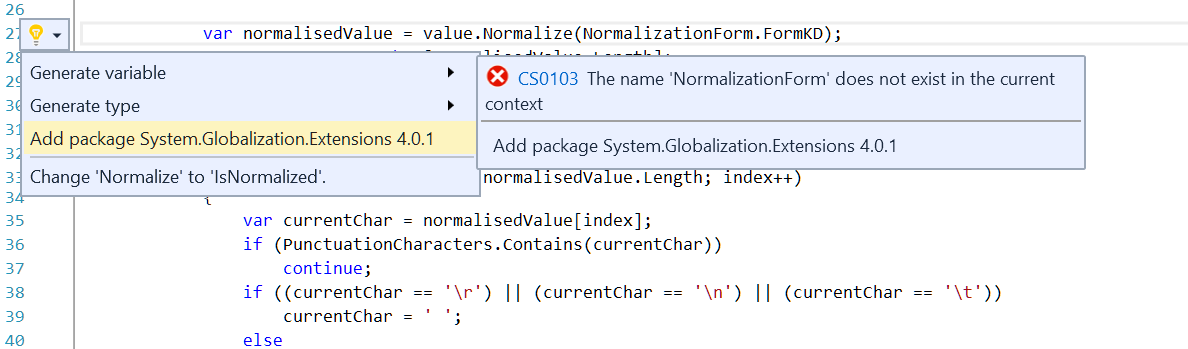

There was one other dependency that was required for Core to build - "System.Globalization.Extensions". This was because the DefaultStringNormaliser class includes the line

var normalisedValue = value.Normalize(NormalizationForm.FormKD);

which resulted in the error

'string' does not contain a definition for 'Normalize' and no extension method 'Normalize' accepting a first argument of type 'string' could be found (are you missing a using directive or an assembly reference?)

This is another case of functionality that is in .NET 4.5.2 but that is an optional package for .NET Core. Thankfully, it's easy to find out what additional package needs to be included - the "lightbulb" code fix options will try to look for a package to resolve the problem and it correctly identifies that "System.Globalization.Extensions" contains a relevant extension method (as illustrated below).

Note: Selecting the "Add package System.Globalization.Extensions 4.0.1" option will add the package as a dependency for netstandard in the project.json file and it will add the required "using System.Globalization;" statement to the class - which is very helpful of it!
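For context, a call like the one above is typically followed by stripping out the combining marks that the decomposition produces. The sketch below assumes that pattern (I'm not reproducing the DefaultStringNormaliser here, and the class and method names are hypothetical) and should build for netstandard1.6 once the "System.Globalization.Extensions" package has been added.

```csharp
using System;
using System.Globalization;
using System.Text;

// Hypothetical helper class, for illustration only
public static class NormalisationSketch
{
    // Decomposes characters (so that "é" becomes "e" plus a combining accent)
    // and then drops the combining accents, leaving the base letters behind
    public static string RemoveDiacritics(string value)
    {
        if (value == null)
            throw new ArgumentNullException(nameof(value));

        var decomposed = value.Normalize(NormalizationForm.FormKD);
        var builder = new StringBuilder(decomposed.Length);
        foreach (var character in decomposed)
        {
            if (CharUnicodeInfo.GetUnicodeCategory(character) != UnicodeCategory.NonSpacingMark)
                builder.Append(character);
        }
        return builder.ToString();
    }
}
```

With this in place, a search for "cafe" could be matched against content containing "café", which is the sort of equivalence that a string normaliser is there to provide.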

All that remained now was to use the workaround from before to add the .NET Core version of the "Core" project as a reference to the projects that required it.

The third and fourth projects (both class libraries)

The process for the "Helpers" and "Querier" class libraries was simple. Neither required anything that wasn't included in netstandard1.6 and so it was just a case of going through the motions.

The "Tester" Console Application

At this point, all of the projects that constituted the actual "Full Text Indexer" were building for both the netstandard1.6 framework and .NET 4.5.2 - so I could have stopped here, really (aside from the serialisation issues I had been putting off). But I thought I might as well go all the way and see if there were any interesting differences in migrating Console Applications and xUnit test suite projects.

For the Tester project; no, not much was different. It has an end-to-end example integration where it loads data from a Sqlite database file of Blog posts using Dapper and then creates a search index. The posts contain markdown content and so three NuGet packages were required - Dapper, System.Data.Sqlite and MarkdownSharp.

Dapper supports .NET Core and so that was no problem but the other two did not. Thankfully, though, there were alternatives that did support netstandard - Microsoft.Data.Sqlite and Markdown. Using Microsoft.Data.Sqlite required some (very minor) code changes while Markdown exposed exactly the same interface as MarkdownSharp.

The xUnit Test Suite Project

The "UnitTests" project didn't require anything very different but there are a few gotchas to watch out for..

The first is that you need to create a "Console Application (.NET Core)" project since xUnit works with the "netcoreapp1.0" framework (which console applications target) and not "netstandard1.6" (which is what class libraries target).

The second is that, presuming you want the Visual Studio test runner integration (which, surely, you do!) you need to not only add the "xunit" NuGet package but also the "dotnet-test-xunit" package. Thirdly, you need to enable the "Include prerelease" option in the NuGet Package Manager to locate versions of these packages that work with .NET Core (this will, of course, change with time - but as of November 2016 these packages are only available as "prereleases").

Fourthly, you need to add a line

"testRunner": "xunit",

to the project.json file.

Having done all of this, the project should compile and the tests should appear in the Test Explorer window.

Note: I wanted to fully understand each step required to create an xUnit test project but you could also just follow the instructions at Getting started with xUnit.net (.NET Core / ASP.NET Core) which provides you a complete project.json to paste in - one of the nice things about .NET Core projects is that changing (and saving) the project.json is all it takes to change from being a class library (and targeting netstandard) to being a console application (and targeting netcoreapp). Similarly, references to other projects and to NuGet packages are all specified there and saving changes to that project file results in those references immediately being resolved and any specified packages being downloaded.

In the class library projects, I made them all target both netstandard and net452. With the test suite project, if the project.json file is changed to target both .NET Core ("netcoreapp1.0", since it's a console app) and full fat .NET ("net452") then two different versions of the suite will be built. The clever thing about this is that if you use the command line to run the tests -

dotnet test

.. then it will run the tests in both versions. Since there are going to be some differences between the different frameworks and, quite feasibly, between different versions of dependencies then it's a very handy tool to be able to run the tests against all of the versions of .NET that your libraries target.

There is a "but" here, though. While the command line test process will target both frameworks, the Visual Studio Test Explorer doesn't. I think that it only targets the first framework that is specified in the project.json file but I'm not completely sure. I just know that it doesn't run them both. Which is a pity. On the bright side, I do like that .NET Core is putting the command line first - not only because I'm a command line junkie but also because it makes it very easy to integrate into build servers and continuous integration processes. I do hope that one day (soon) the VS integration will be as thorough as the command line tester, though.

Building NuGet packages

So, now, there are no errors and everything is building for .NET Core and for "classic"* .NET.

* I'm still not sure what the accepted terminology is for non-.NET-Core projects, I don't really think that "full fat framework" is the official designation :)

There are no nasty workarounds required for the references (like when the not-yet-migrated .NET 4.5.2 projects were referencing the .NET Core projects). It's worth mentioning that that workaround was only required when the .NET 4.5.2 project wanted to reference a .NET Core project from within the same solution - if the project that targeted both "netstandard1.6" and "net452" was built into a NuGet package then that package could be added to a .NET Core project or to a .NET 4.5.2 project without any workarounds. Which makes me think that now is a good time to talk about building NuGet packages from .NET Core projects..

The project.json file has enough information that the "dotnet" command line can create a NuGet package from it. So, if you run the following command (you need to be in the root of the project that you're interested in to do this) -

dotnet pack

.. then you will get a NuGet package built, ready to distribute! This is very handy, it makes things very simple. And if the project.json targets both netstandard1.6 and net452 then you will get a NuGet package that may be added to either a .NET Core project or a .NET 4.5.2 (or later) project.

I hadn't created the Full Text Indexer as a NuGet package before now, so this seemed like a good time to think about how exactly I wanted to do it.

There were a few things that I wanted to change with what "dotnet pack" gave me at this point -

- The name and ID of the package come from the project name, so the Core project resulted in a package named "Core", which is too vague

- I wanted to include additional metadata in the packages such as a description, project link and icon url

- If each project would be built into a separate package then it might not be clear to someone what packages are required and how they work together, so it probably makes sense to have a combined package that pulls in everything

For points one and two, the "project.json reference" documentation has a lot of useful information. It describes the "name" attribute -

The name of the project, used for the assembly name as well as the name of the package. The top level folder name is used if this property is not specified.

So, it sounds like I could add a line to the Common project -

"name": "FullTextIndexer.Common",

.. which would result in the NuGet package for "Common" having the ID "FullTextIndexer.Common". And it does!

However, there is a problem with doing this.

The "Common" project is going to be built into a NuGet package called "FullTextIndexer.Common" so the projects that depend upon it will need updating - their project.json files need to change the dependency from "Common" to "FullTextIndexer.Common". If the Core project, for example, wasn't updated to state "FullTextIndexer.Common" as a dependency then the "Core" NuGet package would have a dependency on a package called "Common", which wouldn't exist (because I want to publish it as "FullTextIndexer.Common"). The issue is that if Core's project.json is updated to specify "FullTextIndexer.Common" as a dependency then the following errors are reported:

NuGet Package Restore failed for one or more packages. See details in the Output window.

The dependency FullTextIndexer.Common >= 1.0.0-* could not be resolved.

The given key was not present in the dictionary.

To cut a long story short, after some trial and error experimenting (and having been unable to find any documentation about this or reports of anyone having the same problem) it seems that the problem is that .NET Core dependencies within a solution depend upon the project folders having the same name as the package name - so my problem was that I had a project folder called "Common" that was building a NuGet package called "FullTextIndexer.Common". Renaming the "Common" folder to "FullTextIndexer.Common" fixed it. It probably makes sense to keep the project name, package name and folder name consistent in general, I just wish that the error messages had been more helpful because the process of discovering this was very frustrating!

Note: Since I renamed the project folder to "FullTextIndexer.Common", I didn't need the "name" option in the project.json file and so I removed it (the default behaviour of using the top level folder name is fine).

The project.json reference made the second task simple, though, by documenting the "packOptions" section. To cut to the chase, I changed Common's project.json to the following:

    {
      "version": "1.0.0-*",
      "packOptions": {
        "iconUrl": "https://secure.gravatar.com/avatar/6a1f781d4d5e2d50dcff04f8f049767a?s=200",
        "projectUrl": "https://bitbucket.org/DanRoberts/full-text-indexer",
        "tags": [ "C#", "full text index", "search" ]
      },
      "authors": [ "ProductiveRage" ],
      "copyright": "Copyright 2016 ProductiveRage",
      "dependencies": {},
      "frameworks": {
        "netstandard1.6": {
          "imports": "dnxcore50",
          "dependencies": {
            "NETStandard.Library": "1.6.0"
          }
        },
        "net452": {}
      }
    }

I updated the other class library projects similarly and updated the dependency names on all of the projects in the solution so that everything was consistent and compiling.

Finally, I created an additional project named simply "FullTextIndexer" whose only role in life is to generate a NuGet package that includes all of the others (it doesn't have any code of its own). Its project.json file looks like this:

    {
      "version": "1.0.0-*",
      "packOptions": {
        "summary": "A project to try implementing a full text index service from scratch in C# and .NET Core",
        "iconUrl": "https://secure.gravatar.com/avatar/6a1f781d4d5e2d50dcff04f8f049767a?s=200",
        "projectUrl": "https://bitbucket.org/DanRoberts/full-text-indexer",
        "tags": [ "C#", "full text index", "search" ]
      },
      "authors": [ "ProductiveRage" ],
      "copyright": "Copyright 2016 ProductiveRage",
      "dependencies": {
        "FullTextIndexer.Common": "1.0.0-*",
        "FullTextIndexer.Core": "1.0.0-*",
        "FullTextIndexer.Helpers": "1.0.0-*",
        "FullTextIndexer.Querier": "1.0.0-*"
      },
      "frameworks": {
        "netstandard1.6": {
          "imports": "dnxcore50",
          "dependencies": {
            "NETStandard.Library": "1.6.0"
          }
        },
        "net452": {}
      }
    }

One final note about NuGet packages before I move on - the default behaviour of "dotnet pack" is to build the project in Debug configuration. If you want to build in release mode then you can use the following:

dotnet pack --configuration Release

"Fixing" the serialisation problem

Serialisation in .NET Core seems to be a bone of contention - the Microsoft Team are sticking to their guns in terms of not supporting it and, instead, promoting other solutions:

Binary Serialization

Justification. After a decade of servicing we've learned that serialization is incredibly complicated and a huge compatibility burden for the types supporting it. Thus, we made the decision that serialization should be a protocol implemented on top of the available public APIs. However, binary serialization requires intimate knowledge of the types as it allows to serialize object graphs including private state.

Replacement. Choose the serialization technology that fits your goals for formatting and footprint. Popular choices include data contract serialization, XML serialization, JSON.NET, and protobuf-net.

(from "Porting to .NET Core")

Meanwhile, people have voiced their disagreement in GitHub issues such as "Question: Serialization support going forward from .Net Core 1.0".

The problem with recommendations such as Json.NET and protobuf-net is that they require changes to code that previously worked with BinaryFormatter - there is no simple switchover. Another consideration is that I wanted to see if it was possible to migrate my code over to supporting .NET Core while still making it compatible with any existing installation, such that it could still deserialise any disk-cached data that had been persisted in the past (the chances of this being a realistic use case are exceedingly slim - I doubt that anyone but me has used the Full Text Indexer - I just wanted to see if it seemed feasible).

For the sake of this post, I'm going to cheat a little. While I have come up with a way to serialise index data that works with netstandard, it would probably best be covered another day (and it isn't compatible with historical data, unfortunately). A good-enough-for-now approach was to use "conditional directives", which are basically a way to say "if you're building in this configuration then include this code (and if you're not, then don't)". This allowed me to restore all of the [Serializable] attributes that I removed earlier - but only if building for .NET 4.5.2 (and not for .NET Core). For example:

    #if NET452
    [Serializable]
    #endif
    public class Whatever
    {

The [Serializable] attribute will be included in the binaries for .NET 4.5.2 and not for .NET Core.

Update (9th March 2021): This isn't actually true any more - the BinaryFormatter and Serializable attribute are available in more current versions of .NET Standard and I've recently updated the FullTextIndexer NuGet packages to target such a version. There remains an IndexDataJsonSerialiser class in the FullTextIndexer.Serialisation.Json package, though, which you may find preferable as Microsoft strongly warns against using the BinaryFormatter (the risk only applies to untrusted input, which is not an expected use case for deserialisation of index data, but you still may wish to err on the safe side).

You need to be careful with precisely what conditions you specify, though. When I first tried this, I used the line "#if net452" (where the string "net452" is consistent with the framework target string in the project.json files) but the attribute wasn't being included in the .NET 4.5.2 builds. There was no error reported, it just wasn't getting included. I had to look up the supported values to see if I'd made a silly mistake and it was the casing - it needs to be "NET452" and not "net452".

I used the same approach to restore the ISerializable implementations that some classes had and I used it to conditionally compile the entirety of the IndexDataSerialiser (which I got back out of my source control history, having deleted it earlier).
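As a rough sketch of what a conditionally-compiled ISerializable implementation can look like, here is a made-up class (the "ExampleEntry" name and its properties are purely illustrative, they are not taken from the Full Text Indexer). It assumes that the "NET452" symbol is defined for the .NET 4.5.2 build and not for the netstandard one, as described above.

```csharp
using System;
#if NET452
using System.Runtime.Serialization;
#endif

// Hypothetical class, for illustration only
#if NET452
[Serializable]
#endif
public sealed class ExampleEntry
#if NET452
    : ISerializable
#endif
{
    public ExampleEntry(int key, float weight)
    {
        Key = key;
        Weight = weight;
    }

    public int Key { get; }
    public float Weight { get; }

#if NET452
    // Only compiled into the .NET 4.5.2 build: the constructor that the
    // BinaryFormatter calls when deserialising an instance
    private ExampleEntry(SerializationInfo info, StreamingContext context)
    {
        Key = info.GetInt32("Key");
        Weight = info.GetSingle("Weight");
    }

    // Only compiled into the .NET 4.5.2 build: records the data to serialise
    public void GetObjectData(SerializationInfo info, StreamingContext context)
    {
        info.AddValue("Key", Key);
        info.AddValue("Weight", Weight);
    }
#endif
}
```

When the symbol is not defined, everything inside the "#if" blocks disappears and the class compiles as a plain immutable type, so the rest of the library can use it in exactly the same way on both targets.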

This meant that if the "FullTextIndexer" package is added to a project building for the "classic" .NET framework then all of the serialisation options that were previously available still will be - any disk-cached data may be read back using the IndexDataSerialiser. It wouldn't be possible if the package is added to a .NET Core project but this compromise felt much better than nothing.

Final tweaks and parting thoughts

The migration is almost complete at this point. There's one minor thing I've noticed while experimenting with .NET Core projects; if a new solution is created whose first project is a .NET Core class library or console application, the project files aren't put into the root of the solution - instead, they are in a "src" folder. Also, there is a "global.json" file in the solution root that enables.. magic special things. If I'm being honest, I haven't quite wrapped my head around all of the potential benefits of global.json (though there is an explanation of one of the benefits here; The power of the global.json). What I'm getting around to saying is that I want my now-.NET-Core solution to look like a "native" .NET Core solution, so I tweaked the folder structure and the .sln file to be consistent with a solution that had been .NET Core from the start. I'm a fan of consistency and I think it makes sense to have my .NET Core solution follow the same arrangement as everyone else's .NET Core solutions.

Having gone through this whole process, I think that there's an important question to answer: Will I now switch to defaulting to supporting .NET Core for all new projects?

.. and the answer is, today, if I'm being honest.. no.

There are just a few too many rough edges and question marks. The biggest one is the change that's going to happen away from "project.json" and to a variation of the ".csproj" format. I'm sure that there will be some sort of simple migration tool but I'd rather know for sure what the implications are going to be around this change before I commit too much to .NET Core.

I'm also a bit annoyed that the Productivity Power Tools remove-and-sort-usings-on-save doesn't work with .NET Core projects (there's an issue on GitHub about this but it hasn't been responded to since August 2016, so I'm not sure if it will get fixed).

Finally, I'm sure I read an issue around analysers being included in NuGet packages for .NET Core - that they weren't getting loaded correctly. I can't find the issue now so I've done some tests to try to confirm or deny the rumour.. I've got a very simple project that includes an analyser and whose package targets both .NET 4.5 and netstandard1.6 and the analyser does seem to install correctly and be included in the build process (see ProductiveRage.SealedClassVerification) but I still have a few concerns; in .csproj files, analysers are all explicitly referenced (and may be enabled or disabled in the Solution Explorer by going into References/Analyzers) but I can't see how they're referenced in .NET Core projects (and they don't appear in the Solution Explorer). Another (minor) thing is that, while the analyser does get executed and any warnings displayed in the Error List in Visual Studio, there are no squigglies underlining the offending code. I don't know why that is and it makes me worry that the integration is perhaps a bit flakey. I'm a big fan of analysers and so I want to be sure that they are fully supported*. Maybe this will get tidied up when the new project format comes about.. whenever that will be.

* (Update: Having since added a code fix to the "SealedClassVerification" analyser, I've realised that the no-squigglies-in-editor problem is worse than I first thought - it means that the lightbulb for the code fix does not appear in the editor and so the code fix can not be used. I also found the GitHub issue that I mentioned: "Analyzers fail on .NET Core projects", it says that improvements are on the way "in .NET Core 1.1" which should be released sometime this year.. maybe that will improve things)

I think that things are close (and I like that Microsoft is making this all available early on and accepting feedback on it) but I don't think that it's quite ready enough for me to switch to it full time yet.

Finally, should you be curious at all about the Full Text Indexer project that I've been talking about, the source code is available here: bitbucket.org/DanRoberts/full-text-indexer and there are a range of old posts that I wrote about how it works (see "The Full Text Indexer Post Round-up").

Posted at 13:38

JavaScript Compression (Putting my JSON Search Indexes on a diet)

When I wrote a couple of weeks ago about writing a way to consume Full Text Indexer data entirely on the client (so that I could recreate my blog's search functionality at productiverage.neocities.org - see The Full Text Indexer goes client-side!), I said that

Yes, the search index files are bigger than I would have liked..

This may have been somewhat of an understatement.

As a little refresher: there is one JSON file responsible for matching posts to search terms ("oh, I see you searched for 'cats'! The posts that you want are, in descending order of match quality: 26, 28, 58, 36, 27, 53, 24"). Then there is a JSON file per-post for all of the source location mappings. These describe precisely where in the posts the tokens may be matched. There's quite a lot of data to consider and it's in a fairly naive JSON structure (I used single letter property names but that was the only real attempt at size-constraining*). On top of this, there's a plain-text representation of each post so that the source location mappings can be used to generate a best match "content excerpt" for each result (à la Google's results, which highlight matched terms). And finally a JSON file for all of the titles of the posts so that the titles can be displayed when the search logic knows which posts match the query but the content excerpt generation is still being worked on in the background.

This results in 5.8MB of JSON and plain text data.

Now, in fairness, this is all gzip'd when it comes down the wire and this sort of data compresses really well. And the detail files for the posts are only requested when the corresponding posts are identified as being results for the current search. So in terms of what the user has to download, it's no big deal.

However, the hosting at neocities.org only offers 10MB at the moment so 5.8MB solely for searching seems a little excessive. To put it into perspective, this is several times more than the html required to render all of the actual posts!

I hadn't actually realised just how much of a problem it was until I published my last post. When I came to re-generate the flattened content to upload to NeoCities, that last post (being something of a beast) pushed the storage requirements past the magic 10MB mark!

* (Very minor diversion: There was actually one potential optimisation that I did consider when first serialising the index data for use on the client. Instead of a simple associative array, I wondered if using a Ternary Search Tree would reduce the space requirements if a lot of the keys had similarities. I used a TST internally in the C# project for performance reasons and talked about it in The .Net Dictionary is FAST!. Alas, when I tried it as a structure for my data, it resulted in slightly larger files).

So. Compression, yeah? In JavaScript??

The biggest contributors to size are the per-post search index files that contain the source locations data. Each is JSON data describing an associative array matching a "normalised" search term (to take into account plurality, case-insensitivity, etc) to a post key, weight and array of source locations. Each source location has the fields FieldIndex, TokenIndex, ContentIndex and ContentLength (read all about it at The Full Text Indexer: Source Locations).
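To make that shape a little more concrete, here is a rough sketch of what one entry in a per-post file might look like. The single-letter property names (k, w, l, f, t, c, n) and the values are made up for illustration and are an assumption, not a copy of the real file format.

```javascript
// Illustrative only: the property names and values are assumptions, not the real format
var examplePostDetail = {
  "cat~": {
    k: 26,    // post Key
    w: 3.5,   // match Weight
    l: [      // source Locations
      { f: 1, t: 12, c: 57, n: 4 } // FieldIndex, TokenIndex, ContentIndex, ContentLength
    ]
  }
};

// Expands the terse names back into the descriptive ones used in the C# project
function expandSourceLocation(location) {
  return {
    FieldIndex: location.f,
    TokenIndex: location.t,
    ContentIndex: location.c,
    ContentLength: location.n
  };
}
```

Every property name is repeated for every source location of every token, which is why shortening them helps and also why this sort of data is so repetitive (and so compressible).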

I know that this data can be compressed since, not only does textual or JSON data frequently lend itself to being effectively compressed, but I can see the compression that gzip achieves when the data is delivered to the browser.

My first thought when it comes to compression is that we're dealing with binary data. Certainly, when I see gzip'd responses in Fiddler (before using the "Response is encoded.. Click here to transform" link) it looks like gobbledygook, not like any text I know!

This reminded me of something I read some time ago about a guy creating a PNG file where the pixels are generated from bytes extracted from textual content. This PNG can be read by javascript and the pixels extracted again, and from the pixel values the textual source content can be recreated. The real benefit with PNG here is that it incorporates a lossless compression scheme. Lossless compression is really important here; it means that the decompressed content will be identical to the source content. JPEG is a lossy scheme and can achieve higher compression rates if the quality is reduced sufficiently. But it loses information when it does this. The idea is that the resulting image is either acceptably close visually to the source image or that the discrepancy is evident but thought to be a worthwhile trade-off considering the file size benefits. If we're trying to extract text data that represents javascript then "close-ish, maybe" is not going to cut it!

The original article that I'd remembered was this: Compression using Canvas and PNG-embedded data. The results sound impressive, he got an older version of jQuery (1.2.3) compressed down from 53kb to 17kb. Bear in mind that this is already the minified version of the code! It's quite a short article and interesting, so give it a visit (and while you're there notice that the Mario background is interactive and can be played using the cursor keys! :)

The summary of the article, though, is that it's not suitable for mainstream use. Browser support is restricted (all modern browsers now would work, I'm sure, but I don't know how many versions of IE it would work in). And it concludes with "this is meant only as thing of interest and is not something you should use in most any real life applications, where something like gzip will outperform this".

Ok.

Glad I looked it up again, though, since it was interesting stuff.

On the same blog, there's also an article Read EXIF data with Javascript, which talks about retrieving binary data from a file and extracting the data from it. In this case, the content would have to be compressed when written and then decompressed by the javascript loading it. Unlike the PNG approach, compression doesn't come for free. From the article, he's written the file binaryajax.js. Unfortunately, browser support apparently is still incomplete. The original plan outlined works for Chrome and Firefox but then some dirty hacks (including rendering VBScript script tags and executing those functions) are required for IE and, at the time at least, Opera wouldn't work at all.

Again, interesting stuff! But not quite what I want.

Help, Google!

So I had to fall back to asking google about javascript compression and trying not to end up in article after article about how to minify scripts.

In fairness, it didn't take too long at all until a pattern emerged where a lot of people were talking about LZ compression (info available at the Wikipedia page). And I finally ended up here: lz-string: JavaScript compression, fast!

From that page -

lz-string was designed to fulfill the need of storing large amounts of data in localStorage, specifically on mobile devices. localStorage being usually limited to 5MB, all you can compress is that much more data you can store.

What about other libraries?

All I could find was:

- some LZW implementations which gives you back arrays of numbers (terribly inefficient to store as tokens take 64bits) and don't support any character above 255.

- some other LZW implementations which gives you back a string (less terribly inefficient to store but still, all tokens take 16 bits) and don't support any character above 255.

- an LZMA implementation that is asynchronous and very slow - but hey, it's LZMA, not the implementation that is slow.

- a GZip implementation not really meant for browsers but meant for node.js, which weighted 70kb (with deflate.js and crc32.js on which it depends).

This is sounding really good (and his view of the state of the other libraries available reflects my own experiences when Googling around). And he goes on to say

localStorage can only contain JavaScript strings. Strings in JavaScript are stored internally in UTF-16, meaning every character weight 16 bits. I modified the implementation to work with a 16bit-wide token space.

Now, I'm not going to be using it with localStorage but it's gratifying to see that this guy has really understood the environment in which it's going to be used and how best to use that to his advantage.

Preliminary tests went well; I was compressing this, decompressing that, testing this, testing that. It was all going swimmingly! The only problem now was that this was clearly a custom (and clever) implementation of the algorithm so I wouldn't be able to use anything standard on the C# side to compress the data if I wanted the javascript to be able to decompress it again. And the whole point of all of this is to "flatten" my primary blog and serialise the search index in one process, such that it can be hosted on NeoCities.

The javascript code is fairly concise, so I translated it into C#. When I'd translated C# classes from my Full Text Indexer into javascript equivalents, it had gone surprisingly painlessly. I'd basically just copied the C# code into an empty file and then removed types and tweaked things to work as javascript. So I thought I'd take a punt and try the opposite - just copy the javascript into an empty C# class and then try to fix it up. Adding appropriate types and replacing javascript methods with C# equivalents. This too seemed to go well, I was compressing some strings to text files, pulling them with ajax requests in javascript, decompressing them and viewing them. Success!

Until..

I gave it a string that didn't work. The decompress method returned null. Fail.

Troubleshooting

I figured that there's only so much that could be going wrong. If I compressed the same string with the javascript code then the javascript code could decompress it just fine. The data compressed with the C# version refused to be decompressed by the javascript, though. Chances are I made a mistake in the translation.

I got the shortest string that I could find that reproduced the problem (it's from the titles-of-all-posts JSON that the search facility uses) -

{"1":"I lo

and got both the C# and javascript code to print out a list of character codes that were generated when that string was compressed.

These were identical. So maybe my translation wasn't at fault.
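For what it's worth, the javascript half of this sort of comparison needs nothing more than charCodeAt. A minimal sketch (the function name is just for illustration, and it is run here against the uncompressed input string rather than against the compressed output):

```javascript
// Returns the UTF-16 code unit value of every character in a string
function getCharCodes(str) {
  var codes = [];
  for (var i = 0; i < str.length; i++) {
    codes.push(str.charCodeAt(i));
  }
  return codes;
}

console.log(getCharCodes('{"1":"I lo').join(","));
// prints 123,34,49,34,58,34,73,32,108,111
```

Casting each char of a C# string to an int gives the equivalent list on the other side, so the two outputs can be compared directly.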

Well something must be getting lost somewhere!

This led me on to wondering if it was somehow the encoding. The compressed content is being served up as UTF8 (basically the standard on the web) but the compression algorithm is intended to compress to UTF16. Now, surely this won't make a difference? It means that the bits sent over the wire (in UTF8) will not be the exact same bits as the javascript string (UTF16) is represented by when it's received, but these encoded bits should be describing the same character codes.

So the next step was to intercept the ajax request that the javascript client was making for the data (compressed by C# code, delivered over the wire with UTF8 encoding) and to write out the character codes at that point.

And there was a discrepancy! The character codes received were not the same as the ones that I'd generated with the C# code and that I thought I was transmitting!

Thinking still that this must somehow be encoding-related, I started playing around with the encoding options when writing the data to disk. And noticed, innocently hidden away in an alternate constructor signature, the System.Text.UTF8Encoding class has an option to "throwOnInvalidBytes". What is this?

I knew how UTF8 worked, that it uses a variable number of bytes and uses the most-significant-bits to describe how many bytes are required for the current character (the Wikipedia article explains it nicely) and thought that that was pretty much all there was to it. So how could a byte be invalid?? Well, with this constructor argument set to true, I was getting the error

Unable to translate Unicode character \uD900 at index 6 to specified code page.

so clearly particular bytes can be invalid somehow..
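For reference, the variable-length scheme that I had in my head (leading bits of the first byte say how many bytes follow) can be sketched like this. It's a simplified illustration rather than a production encoder and the function name is made up; note that it happily encodes values such as 0xD900, which is exactly the check that a strict encoder adds on top.

```javascript
// Encodes a single code point (0 to 0x10FFFF) into UTF8 bytes.
// Surrogate values (0xD800 to 0xDFFF) are NOT rejected here; a strict encoder would refuse them.
function encodeCodePointAsUtf8(codePoint) {
  if (codePoint < 0x80) {
    return [codePoint];                          // 0xxxxxxx
  }
  if (codePoint < 0x800) {
    return [
      0xC0 | (codePoint >> 6),                   // 110xxxxx
      0x80 | (codePoint & 0x3F)                  // 10xxxxxx
    ];
  }
  if (codePoint < 0x10000) {
    return [
      0xE0 | (codePoint >> 12),                  // 1110xxxx
      0x80 | ((codePoint >> 6) & 0x3F),          // 10xxxxxx
      0x80 | (codePoint & 0x3F)                  // 10xxxxxx
    ];
  }
  return [
    0xF0 | (codePoint >> 18),                    // 11110xxx
    0x80 | ((codePoint >> 12) & 0x3F),           // 10xxxxxx
    0x80 | ((codePoint >> 6) & 0x3F),            // 10xxxxxx
    0x80 | (codePoint & 0x3F)                    // 10xxxxxx
  ];
}
```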

UTF Limitations

With this error, it didn't actually take much searching. There's a page on www.unicode.org, "Are there any 16-bit values that are invalid?", that states that

Unpaired surrogates are invalid in UTF8. These include any value in the range D800₁₆ to DBFF₁₆ not followed by a value in the range DC00₁₆ to DFFF₁₆, or any value in the range DC00₁₆ to DFFF₁₆ not preceded by a value in the range D800₁₆ to DBFF₁₆
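That rule is easy enough to check for in code. The sketch below (function name made up for illustration) finds unpaired surrogates in a javascript string, and then uses the standard TextEncoder to show what a non-throwing UTF8 encoder does with one: it swaps it for the replacement character U+FFFD. I'm assuming that a substitution along these lines is what was happening to my data, which would explain why the character codes that arrived were not the ones that I sent.

```javascript
// Returns the indexes of any UTF-16 code units that are unpaired surrogates
function findUnpairedSurrogates(str) {
  var indexes = [];
  for (var i = 0; i < str.length; i++) {
    var code = str.charCodeAt(i);
    if (code >= 0xD800 && code <= 0xDBFF) {
      // High surrogate: only valid if immediately followed by a low surrogate
      var next = (i + 1 < str.length) ? str.charCodeAt(i + 1) : 0;
      if (next >= 0xDC00 && next <= 0xDFFF) {
        i++; // skip over the low half of a valid pair
      } else {
        indexes.push(i);
      }
    } else if (code >= 0xDC00 && code <= 0xDFFF) {
      // Low surrogate that was not consumed as the second half of a pair
      indexes.push(i);
    }
  }
  return indexes;
}

// TextEncoder never throws; the lone \uD900 becomes U+FFFD (bytes EF BF BD)
var encodedBytes = Array.from(new TextEncoder().encode("ab\uD900cd"));
```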

I spent a little while wandering through various searches on the internet trying to decide what the best way would be to try to address this. I didn't want to have to try to find another compressor for all of the reasons that the author of the one I'm using outlined! Which made me think, maybe there's more information about this on his site.

Lo and behold, in the changelog (at the bottom of that page), there's mention that there's a v1.3.0 available that has additional methods compressToUTF16 and decompressFromUTF16 ("version 1.3.0 now stable") that "allow lz-string to produce something that you can store in localStorage on IE and Firefox".

These new methods wrap the methods "compress" and "decompress". But the "compress" and "decompress" methods in this new version of the code look different to those in the code that I had been using (and had translated). But it's no big deal to translate the newer version (and the new methods).

And now it works! I wish that this version had been the file you see when you go to the main lz-string GitHub page rather than being hidden in the "libs" folder. But considering how happy I am that the solution to my problem has been provided to me with little-to-no-effort, I'm really not going to complain! :)
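As far as I can tell, the trick that makes the UTF16 variants safe is to stop using the full 16 bits of each character: if only 15 bits are stored per character, and a small offset is added, then no value can ever land in the surrogate range. The sketch below illustrates that general idea with a plain byte array. It is my own simplified illustration, not lz-string's code, and the function names are hypothetical.

```javascript
// Packs bytes into a string using 15 bits per character, offset by 32, so every
// character code falls between 32 and 32799 (well below the surrogates at 0xD800)
function packTo15BitChars(bytes) {
  var out = "";
  var buffer = 0;   // bit accumulator
  var bitCount = 0; // number of unread bits currently in the accumulator
  bytes.forEach(function (b) {
    buffer = (buffer << 8) | b;
    bitCount += 8;
    while (bitCount >= 15) {
      bitCount -= 15;
      out += String.fromCharCode(((buffer >> bitCount) & 0x7FFF) + 32);
    }
    buffer &= (1 << bitCount) - 1; // keep only the bits not yet written
  });
  if (bitCount > 0) {
    // Left-align whatever is left over in a final, zero-padded character
    out += String.fromCharCode(((buffer << (15 - bitCount)) & 0x7FFF) + 32);
  }
  return out;
}

// Reverses packTo15BitChars; byteLength is needed to ignore the padding bits
function unpackFrom15BitChars(text, byteLength) {
  var bytes = [];
  var buffer = 0;
  var bitCount = 0;
  for (var i = 0; i < text.length && bytes.length < byteLength; i++) {
    buffer = (buffer << 15) | (text.charCodeAt(i) - 32);
    bitCount += 15;
    while (bitCount >= 8 && bytes.length < byteLength) {
      bitCount -= 8;
      bytes.push((buffer >> bitCount) & 0xFF);
    }
    buffer &= (1 << bitCount) - 1;
  }
  return bytes;
}
```

The cost is one wasted bit in every sixteen, which seems a small price for output that survives being written to disk as UTF8 and read back again.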

Incorporating it into the solution

Step 1 was to alter my Blog-to-NeoCities Transformer to write compressed versions of the per-post source location mappings data, along with compressed versions of the plain text post data and the JSON that has the titles for each post.

The C# translation of the LZString code can be seen at: LZStringCompress.cs.

Step 2 was to alter the javascript search code to handle the compressed content. Having included the 1.3.0 version of LZString.js, I needed to replace some of the $.ajax requests with calls to one of the following functions:

function LoadCompressedData(strUrl, fncSuccess, fncFailure) {
  // Note: I've seen this fail when requesting files with extension ".js" but work when the exact
  // same file is renamed to ".txt", I'm not sure if this is in IIS that it's failing or if jQuery
  // is requesting the data in a slightly different manner (despite the explicit dataType option),
  // so best just ensuring that all LZ-compressed data is stored in a file with a ".txt" extension.
  $.ajax({
    url: strUrl,
    dataType: "text",
    success: function(strCompressedContent) {
      var strContent;
      try {
        strContent = LZString.decompressFromUTF16(strCompressedContent);
      }
      catch(e) {
        if (fncFailure) {
          fncFailure(e);
        }
        return;
      }
      fncSuccess(strContent);
    }
  });
}

function LoadCompressedJsonData(strUrl, fncSuccess, fncFailure) {
  LoadCompressedData(
    strUrl,
    function(strContent) {
      var objData;
      try {
        eval("objData = " + strContent);
      }
      catch(e) {
        if (fncFailure) {
          fncFailure(e);
        }
        return;
      }
      fncSuccess(objData);
    },
    function() {
      if (fncFailure) {
        fncFailure(arguments);
      }
    }
  );
}

Step 3 is.. there is no step 3! Everything was now working but taking up less space on the hosting.

When compressed, the detailed source location mappings data is reduced from a combined 4.7MB to 1.5MB. The plain text content was reduced from 664kb to 490kb (not as much of a saving as I'd expected, to be honest). The titles JSON shrank marginally from 2.58kb to 2.36kb. The summary data JSON wasn't compressed so that the first stage of the search could be performed as quickly as possible and one non-compressed file on the server was no problem (it's still gzip'd when passed to the client, though, so there's no bandwidth cost). In total, 5.3MB of data was condensed into requiring less than 2MB on the server. Which I am happily marking down as a win :)

So here's to me hopefully fitting many more posts (along with all of the related javascript searching data) into the NeoCities hosting limitations! I'm sure that if I ever start pushing that 10MB point again, by then the 10MB limit will have been raised - 20MB is already on the way!

Posted at 23:22

The NeoCities Challenge! aka The Full Text Indexer goes client-side!

When I heard about NeoCities last week, I thought it was a cool idea - offering some back-to-basics hosting for an outlay of absolutely zero. Yeah, the first thing that came to mind was those geocities sites that seemed to want to combine pink text, lime green backgrounds and javascript star effects that chase the cursor.. but that's just nostalgia! :)

The more I thought about it, the more I sort of wondered whether I couldn't host this blog there. I'm happy with my hosting, it's cheap and fast, but I just liked the idea of creating something simple with the raw ingredients of html, css and javascript alone. I mean, it should be easy enough to flatten the site so that all of the pages are single html files, with a different file per month, per Post, per tag.. the biggest stumbling block was the site search which is powered by my Full Text Indexer project. Obviously that won't work when everything is on the client, when there is no server backend to power it. Unless..

.. a client-side Full Text Indexer Search Service.. ??

Challenge accepted!! :D

NeoCities Hosting

Before I get carried away, I'll breeze through the NeoCities basics and how I got most of my site running at NeoCities. I initially thought about changing the code that runs my site server-side to emit a flattened version for upload. But that started to seem more and more complicated the more I thought about it, and seemed like maintaining it could be a headache if I changed the blog over time.

So instead, I figured why not treat the published blog (published at www.productiverage.com) as a generic site and crawl it, grab content, generate new urls for a flattened structure, replace urls in the existing content with the new urls and publish it like that. How hard could it be??

Since my site is almost entirely html and css (well, LESS with some rules and structure, but it compiles down to css so why be fussy) with only a smattering of javascript, this should be easy. I can use the Html Agility Pack to parse and alter html and I very conveniently have a CSS Parser that can be used to update urls (like backgrounds) in the stylesheets.

And, in a nutshell, that's what I've done. The first version of this NeoCities-hosted blog hid the site search functionality and ran from pure html and css. I had all of the files ready to go in a local IIS site as a proof of concept. Then I went to upload them.. With the Posts and the Archive and Tags pages, there were 120-something files. I'd only used the uploader on the NeoCities page to upload a single test file up to this point and so hadn't realised that that's all it would let you do; upload a single file at a time. Even if I was willing to upload all of the files individually now (which, I must admit, I did; I was feeling overexcitable about getting the first version public! :) this wouldn't scale, I couldn't (wouldn't) do it every time I changed something - a single new Post could invalidate many Pages, considering the links between Posts, the Monthly Archive pages, the Tags pages, etc..

I spent a few minutes googling to see if anyone else could offer a sensible solution and came up empty.

So Plan B was to have a look with Fiddler to see what the upload traffic looked like. It seemed fairly straight-forward, an authorisation cookie (to identify who I was logged in as) and a "csrf_token" form value, along with the uploaded file's content. I was familiar with the phrase "Cross Site Request Forgery" (from "csrf_token") but didn't really know what it meant in this context. I thought I'd take a punt and try manipulating the request to see if that token had to vary between uploads and it didn't seem to (Fiddler lets you take a request, edit it and broadcast it - so uploading a text file provided an easy to mess-with request, I could change one character of the file and leave everything else, content-length included, the same and refresh the browser to see if the new content had arrived).

This was enough to use the .net WebRequest class and upload files individually. I wrote something to loop over the files in my local version of the site and upload them all.. and it worked! There were some stumbling blocks with getting the cookie sent and specifying the form value and the file to upload but StackOverflow came to the rescue each time. There is one outstanding issue that each upload request received a 500 Server Error response even though it was successful, but I chose to ignore that for now - yes, this approach is rough around the edges but it's functional for now!

In case this is useful to anyone else, I've made the code available at Bitbucket: The BlogToNeocitiesTransformer.

If you plug in the auth cookie and csrf token values into the (C#) console application (obtaining those values by looking at a manual upload request in Fiddler still seems like the only way right now) then you can use it yourself, should you have need to. That app actually does the whole thing; downloads a site's content, generates a flattened version (rewriting the html and css, ensuring the urls follow NeoCities' filename restrictions) and then uploads it all to a NeoCities account.

Temporary Measures

Thankfully, this file upload situation looks to only be temporary. Kyle Drake (NeoCities' proud father) has updated the blog today with NeoCities can now handle two million web sites. Here's what we're working on next. In there he says that the file upload process is going to be improved with "things like drag-and-drop file uploading, and then with an API to allow developers to write tools to upload files", which is excellent news! He also addresses the limits on file types that may be uploaded. At the moment "ico" is not in the white list of allowed extensions and so I can't have a favicon for my blog at NeoCities. But this is soon to be replaced with a "black list" (to block executables and the like) so my favicon should soon be possible* :)

* (I half-contemplated, before this announcement, a favicon in another format - such as gif or png - but it seems that this counts out IE and I wanted a solution for all browsers).

Hopefully this black listing approach will allow me to have an RSS feed here as well - essentially that's just an xml file with an xslt to transform the content into a nice-to-view format. And since xslt is just xml I thought that it might work to have an xslt reference in the xml file that has an xml extension itself. But alas, I couldn't get it working, I just kept getting a blank screen in Chrome although "view source" showed the content was present. I'll revisit this when the file restrictions have been changed.

Update (25th July 2013): The NeoCities interface has been updated so that multiple files can be uploaded by dragging-and-dropping! I still can't upload my favicon yet though..

Update (12th August 2014): I'm not sure when, exactly, but favicons are now supported (any .ico file is, in fact). It's a little thing but I do think it adds something to the identity of a site so I'm glad they changed this policy.

Site Search!

This was all well and good, but at this point the site search was missing from the site. This functionality is enabled by server-side code that takes a search string, tries to find matching Posts and then shows the results with Post titles and content excerpts with the matching term(s) highlighted. The matching is done against an index of tokens (possible words) so that the results retrieval can be very fast. The index records where in the source content it matches the token, which enables the excerpt-highlighting. It has support for plurality matching (so it knows that "cat" and "cats" can be considered to be the same word) and has some other flexibility with ignoring letter case, ignoring accents on characters, ignoring certain characters (so "book's" is considered the same as "books") and search term splitting (so "cat posts" matches Posts with "cat" and "posts" in, rather than requiring that a Post contain the exact phrase "cat posts").

But the index is essentially a string-key dictionary onto the match data (the C# code stores it as a ternary search tree for performance but it's still basically just a dictionary). Javascript loves itself some associative arrays (all objects are associative arrays, basically bags of string-named properties) and associative arrays are basically string-key dictionaries. It seemed like the start of an idea!
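One wrinkle worth knowing about when treating a javascript object as a dictionary of tokens: a plain object inherits a few properties of its own, so a search for a word like "constructor" can appear to find something even if that token was never indexed. A small sketch of a safe lookup (the index content and the function name are invented for illustration):

```javascript
// A plain object literal inherits from Object.prototype..
var naiveIndex = { "cat~": [26, 28] };

// ..so this is a function rather than undefined, even though no such token was indexed
var inheritedValue = naiveIndex["constructor"];

// Safer lookup: only return matches for keys that the index object itself owns
function getMatches(index, token) {
  return Object.prototype.hasOwnProperty.call(index, token) ? index[token] : [];
}
```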

If the index was still generated server-side (as it has to be for my "primary" blog hosting) then I should be able to represent this data as something that javascript could interpret and then perform the searching itself on the client..

The plan:

- Get the generated IIndexData<int> from my server-side blog (it's an int since the Posts all have a unique int "Id" property)

- Use the GetAllTokens and GetMatches methods to loop through each token in the data and generate JSON to represent the index, storing at this point only the Post Keys and their match Weight so that searching is possible

- Do something similar to get tokens, Keys, Weights and Source Locations (where in the source content the matches were identified) for each Post, resulting in detailed match data for each individual Post

- Get plain text content for each Post from the server-side data (the Posts are stored as Markdown in my source and translated into html for rendering and plain text for searching)

- Write javascript classes that can use this data to perform a search and then render the results with matched terms highlighted, just like the server-side code can do

- Profit!
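The real work for the first few steps of that plan happens in C# but the shape of the transformation is simple enough to sketch in javascript: take the full index and split it into one summary structure (Keys and Weights only) and one detail structure per Post (with Source Locations). The input shape, the single-letter output names and the function name are all assumptions made for the sake of the example.

```javascript
// fullIndex is assumed to be: normalised token -> array of { Key, Weight, SourceLocations }
function splitIndex(fullIndex) {
  var summary = {};      // token -> array of { k, w }, for every Post
  var detailByPost = {}; // Post Key -> (token -> { k, w, l })
  Object.keys(fullIndex).forEach(function (token) {
    // The summary only needs enough information to rank Posts
    summary[token] = fullIndex[token].map(function (match) {
      return { k: match.Key, w: match.Weight };
    });
    // The per-Post detail also carries the Source Locations for highlighting
    fullIndex[token].forEach(function (match) {
      var detail = detailByPost[match.Key] || (detailByPost[match.Key] = {});
      detail[token] = { k: match.Key, w: match.Weight, l: match.SourceLocations };
    });
  });
  return { summary: summary, detailByPost: detailByPost };
}
```

Calling JSON.stringify on summary and on each entry of detailByPost would then give the content for the individual files.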

(This is actually a slightly amended plan from the original, I tried first to generate a single JSON file with the detailed content for all Posts but it was over 4 meg uncompressed and still bigger than I wanted when delivered from NeoCities gzip'd. So I went for the file-with-summary-data-for-all-Posts and then separate files for detailed data for individual Posts).

I used JSON.Net for the serialisation (it's just the go-to for this sort of thing!) and used intermediary C# classes where each property was only a single character long to try to keep the size of the serialised data down. (There's nothing complicated about this, if more detail is of interest then the code can be found in the JsonSearchIndexDataRecorder class available on Bitbucket).

C# -> Javascript

So now I had a single JSON file for performing a search across all Posts, multiple JSON files for term-highlighting individual Posts and text files containing the content that the source locations mapped onto (also for term-highlighting). I needed to write a javascript ITokenBreaker (to use the parlance of the Full Text Indexer project) to reduce a search term into individual words (eg. "Cat posts" into "Cat" and "posts"), then an IStringNormaliser that will deal with letter casing, pluralisation and all that guff (eg. "Cat" and "posts" into "cat~" and "post~"). Then a way to take each of these words and identify Posts which contain all of the words. And finally a way to use ajax to pull in the detailed match data and plain text content to display the Post excerpts with highlighted search term matches.
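Stripped right down, that pipeline looks something like the sketch below. The token breaker and normaliser here are deliberately naive stand-ins (the real ones handle accents, punctuation and many more plural forms), and the index content, property names and function names are invented for illustration.

```javascript
// Hypothetical summary index: normalised token -> array of { k: Post Key, w: Weight }
var summaryIndex = {
  "cat~": [{ k: 26, w: 5 }, { k: 28, w: 2 }],
  "post~": [{ k: 26, w: 1 }, { k: 58, w: 4 }]
};

// Naive token breaker: split on whitespace
function breakIntoTokens(searchTerm) {
  return searchTerm.split(/\s+/).filter(function (t) { return t.length > 0; });
}

// Naive normaliser: lower-case, strip one trailing "s" and append "~"
function normalise(token) {
  return token.toLowerCase().replace(/s$/, "") + "~";
}

// Returns the Posts that match every token, ordered by descending combined Weight
function search(index, searchTerm) {
  var tokens = breakIntoTokens(searchTerm).map(normalise);
  var combined = null; // Post Key -> summed Weight for Posts matching all tokens so far
  tokens.forEach(function (token) {
    var matches = Object.prototype.hasOwnProperty.call(index, token) ? index[token] : [];
    var next = {};
    matches.forEach(function (match) {
      if (combined === null) {
        next[match.k] = match.w; // first token: every match is a candidate
      } else if (Object.prototype.hasOwnProperty.call(combined, match.k)) {
        next[match.k] = combined[match.k] + match.w; // keep only Posts seen for earlier tokens
      }
    });
    combined = next;
  });
  return Object.keys(combined || {})
    .map(function (key) { return { Key: Number(key), Weight: combined[key] }; })
    .sort(function (a, b) { return b.Weight - a.Weight; });
}
```

With this data, search(summaryIndex, "Cat posts") returns only Post 26 since it is the only one that contains both words.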

To make things as snappy-feeling as possible, I wanted to display the title of matched Posts first while waiting for the ajax requests to deliver the match highlighting information - and for the content excerpts to be added in later.

The file IndexSearchGenerator.js takes a JSON index file and a search term, breaks the search term into words, "normalises" those words, identifies Posts that contain all of the normalised words and returns an array of Key, Weight and (if it was present in the data) Source Locations. It's only 264 lines of non-minified javascript and a lot of that is the mapping of accented characters to non-accented representations. (The Source Locations will not be present in the all-Posts summary JSON but will be in the per-Post detail JSON).

SearchTermHighlighter.js takes the plain text content of a Post, a maximum length for a content-excerpt to show and a set of Source Locations for matched terms and returns a string of html that shows the excerpt that best matches the terms, with those terms highlighted. And that's only 232 lines of non-minified, commented code. What I found particularly interesting with this file was that I was largely able to copy-and-paste the C# code and fudge it into javascript. There were some issues with LINQ's absence. At the start of the GetPlainTextContentAsHighlightedHtml method, for example, I need to get the max "EndIndex" of any of the highlighted terms - I did this by sorting the Source Locations data by the EndIndex property and then taking the EndIndex value of the last element of the array. Easy! The algorithm for highlighting (determining the best excerpt to take and then highlighting any terms, taking care that any overlapped terms don't cause problems) wasn't particularly complicated but it was fairly pleasant to translate it "by rote" and have it work at the end.
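To give a flavour of it, here is a cut-down sketch of the two pieces mentioned above: the sort-and-take-last replacement for LINQ's Max, and the wrapping of matched ranges in highlight tags without letting overlapping matches cause problems. This is not the code from SearchTermHighlighter.js; it assumes that each Source Location has ContentIndex and ContentLength properties, and the function names and the choice of a strong tag are mine.

```javascript
// Stand-in for LINQ's Max: work out each end index, sort ascending, take the last value
function getMaxEndIndex(sourceLocations) {
  if (sourceLocations.length === 0) {
    return 0;
  }
  var endIndexes = sourceLocations
    .map(function (l) { return l.ContentIndex + l.ContentLength; })
    .sort(function (a, b) { return a - b; });
  return endIndexes[endIndexes.length - 1];
}

function escapeHtml(text) {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Wraps matched ranges in <strong> tags, never highlighting the same character twice
function highlight(content, sourceLocations) {
  var ranges = sourceLocations
    .map(function (l) { return { start: l.ContentIndex, end: l.ContentIndex + l.ContentLength }; })
    .sort(function (a, b) { return a.start - b.start; });
  var html = "";
  var position = 0; // index of the first character not yet written out
  ranges.forEach(function (range) {
    if (range.end <= position) {
      return; // entirely covered by an earlier highlight
    }
    var start = Math.max(range.start, position);
    html += escapeHtml(content.substring(position, start));
    html += "<strong>" + escapeHtml(content.substring(start, range.end)) + "</strong>";
    position = range.end;
  });
  return html + escapeHtml(content.substring(position));
}
```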

Finally, SearchPage.js fills in the gaps by determining whether you're on the search page and extracting (and url-decoding) the terms from the Query String if so, performing the initial search against the summary data (displaying the matched titles) and then making the ajax requests for the detailed data and rendering the match excerpts as they become available. Looping through the results, making ajax requests for detail data and handling the results for each Post when it is delivered is a bit like using a complicated asynchronous model in .net but in javascript this sort of async callback madness is par for the course :) If this script decides that you're not on the search page then it makes an ajax request for the summary regardless so that it can be browser-cached and improve the performance of the first search you make (the entirety of the summary data is only 77k gzip'd so it's no big deal if you don't end up actually performing a search).

This last file is only 165 lines of commented, white-spaced javascript so the entire client-side implementation of the search facility is still fairly approachable and maintainable. It's effective (so long as you have javascript enabled!) and - now, bear with me if I'm just being overly impressed with my own creation - it looks cool performing the complicated search mechanics so quickly and fading in the matched excerpts! :)

Signing off

I'm really proud of this and had a lot of fun within the "NeoCities boundaries"! Yes, the source-site-grabbing-and-rewriting could be tidied up a bit. Yes, the file upload is currently a bit of a dodgy workaround. Yes, I still have a handful of TODOs about handling ajax failures in SearchPage.js. Yes, the search index files are bigger than I would have liked (significantly larger than not only the plain text content but also their full page html representations), which I may address by trying out a more complicated format rather than naive JSON (which was very easy). But it all works! And it works well. And the bits that are a bit untidy are only a bit untidy, on the whole they're robust and I'm sufficiently unashamed of them all that the code is all public!

Speaking of which, my blog is primarily hosted at www.productiverage.com* and I write about various projects which are hosted on my Bitbucket account. Among them, the source code for the Blog itself, for the Full Text Indexer which powers the server-hosted blog and which generates the source index data which I've JSON-ified, the CSSParser which I use in the rewriting / site-flattening process, the BlogToNeocitiesTransformer which performs the site-flattening and a few other things that I've blogged about at various times. Ok, self-promotion over! :)

* (If you're reading this at my primary blog address, the NeoCities version with the client-side search can be found at productiverage.neocities.org).

Posted at 20:21

The Full Text Indexer: Search Term Highlighting with Source Locations

I made reference last time (in The Full Text Indexer: Source Locations) to using Source Location data to implement search term highlighting and since adding this functionality to the Full Text Indexer, I've rewritten the search term highlighting mechanism on my blog to illustrate it.

The idea is that when a search is performed, the results page tries to show a snippet of each matching post with instances of the search term(s) highlighted. The source locations will describe where all of the matches are but it might not be possible to show all of the matches if the length of the summary snippet is restricted to, say, 300 characters. So a mechanism is required that can choose the best snippet to show; this might be the segment of content that contains the greatest number of matches, or the greatest cumulative weight of matches. It also needs to bear in mind that it's possible that none of the source locations will be in the post content itself; it's feasible that a search term could be present in a post's title but not its content at all!

Getting straight to it

The previous system that I had on my blog to perform this task was a bit complicated - it tried to determine all of the places in the content where matches might have occurred (this was before source locations information was included in the Full Text Indexer data) and then generated all permutations of combinations of some or all of these matches in order to try to decide what was the best segment of the content to extract and display as the summary. The logic wasn't precisely the same as the Full Text Indexer's searching as I didn't want to go crazy on processing the content - so it wouldn't handle matches of plurals, for example. And the LINQ statement I carefully crafted over a few iterations to generate the permutations of possible matches seemed cryptic when I came back to look at it a few months later.

The new approach is much simpler:

- Sort the source location data by index (where the match appears in the content) and then by length of matched token

- Loop through the source data and build up chains of adjacent / overlapping matches where all of the matches can fit inside a segment that is no longer than maxLengthForHighlightedContent

  - Construct each chain by starting with the current source location

  - Then look ahead to the next source location (if any) and determine whether both it and the current source location fit within the maxLengthForHighlightedContent constraint

  - Continue for the subsequent source locations (as soon as including one would exceed the maxLengthForHighlightedContent, stop looking - this works since they're sorted by index and length)

- Decide which of these source location chains will result in the best summary (if no chains could be constructed then return an empty set instead)

- Return a set of segments (index / length pairs) to highlight - no overlapping segments will be returned, any overlapping segments will be combined (this can make the actual highlighting of search terms much simpler)
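
To make the chain-building steps concrete, here is a minimal sketch that works on a hypothetical Match class (just an index and a length) instead of the indexer's own source location type. The names Match, ChainSketch and BuildChains are invented for this illustration and are not part of the Full Text Indexer; the real implementation follows further down.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a source location (illustration only)
public sealed class Match
{
    public Match(int index, int length) { Index = index; Length = length; }
    public int Index { get; private set; }
    public int Length { get; private set; }
}

public static class ChainSketch
{
    // Returns every run of consecutive matches (ordered by index, then length) whose span,
    // from the start of the first match to the end of the last, is no longer than maxLength
    public static List<List<Match>> BuildChains(IEnumerable<Match> matches, int maxLength)
    {
        var sorted = matches.OrderBy(m => m.Index).ThenBy(m => m.Length).ToList();
        var chains = new List<List<Match>>();
        for (var first = 0; first < sorted.Count; first++)
        {
            var chain = new List<Match>();
            for (var last = first; last < sorted.Count; last++)
            {
                var end = sorted[last].Index + sorted[last].Length;
                if (end - sorted[first].Index > maxLength)
                    break; // Stop extending this chain, as in the approach outlined above

                chain.Add(sorted[last]);
                chains.Add(new List<Match>(chain)); // Each prefix is a candidate chain
            }
        }
        return chains;
    }
}
```

With matches at indexes 0, 10 and 400 (each five characters long) and a maximum length of 300, this produces four candidate chains: the match at 0 alone, the matches at 0 and 10 together, the match at 10 alone and the match at 400 alone.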

The "best summary" is determined by an IComparer that considers different sets of source locations. The implementation I use runs through three metrics:

- The combined MatchWeightContribution of the source location sets (the greater the better)

- If there are chains that the first metric can't differentiate between then consider the number of source locations in the chain (the lower the better, this must mean that the average weight of each match is greater)

- If a decision still hasn't been reached for a given comparison then consider the minimum SourceIndex (the lower the better, meaning the matching starts earlier in the content)
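
As a rough illustration of how those three metrics stack up as tie-breakers, the same ordering could be written with LINQ over a hypothetical WeightedMatch class. This is only a sketch and not the comparer that the blog uses (that one appears later in this post); WeightedMatch, BestChainSketch and PickBest are made-up names, and the sketch assumes that every chain contains at least one match.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in carrying only the values that the three metrics need
public sealed class WeightedMatch
{
    public WeightedMatch(int index, float weight) { Index = index; Weight = weight; }
    public int Index { get; private set; }
    public float Weight { get; private set; }
}

public static class BestChainSketch
{
    // Returns the preferred chain, or null if there are no chains to choose from
    public static IList<WeightedMatch> PickBest(IEnumerable<IList<WeightedMatch>> chains)
    {
        return chains
            .OrderByDescending(chain => chain.Sum(m => m.Weight)) // 1: greatest combined weight
            .ThenBy(chain => chain.Count)                         // 2: fewest matches
            .ThenBy(chain => chain.Min(m => m.Index))             // 3: earliest starting point
            .FirstOrDefault();
    }
}
```

So a chain of two matches with weight 1 each loses to a single match with weight 2 (same combined weight, fewer matches) and, between two single matches of equal weight, the one that starts earlier in the content wins.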

I will only be showing a search summary extracted from the post Content field although the search functionality also considers the Title as well as any Tags for the post. The first Content Retriever extracts content from a plain text version of the post's content so all source locations that relate to the Content field will have a SourceFieldIndex value of zero (I touched briefly on this last time but I'll explain in more detail further down in this post too).

So let's see the code! This is one of those pleasant cases where the code flows nicely from the outlined approach. I didn't go into detail above about how the overlap-prevention was handled but the code (hopefully!) illustrates more than adequately -

using System;
using System.Collections.Generic;
using System.Linq;
using FullTextIndexer.Common.Lists;
using FullTextIndexer.Core.Indexes;

namespace BlogBackEnd.FullTextIndexing
{
  public static class SearchTermHighlighter
  {
    public static NonNullImmutableList<StringSegment> IdentifySearchTermsToHighlight(
      string content,
      int maxLengthForHighlightedContent,
      NonNullImmutableList<SourceFieldLocation> sourceLocations,
      IComparer<NonNullImmutableList<SourceFieldLocation>> bestMatchDeterminer)
    {
      if (content == null)
        throw new ArgumentNullException("content");
      if (maxLengthForHighlightedContent <= 0)
      {
        throw new ArgumentOutOfRangeException(
          "maxLengthForHighlightedContent",
          "must be greater than zero"
        );
      }
      if (sourceLocations == null)
        throw new ArgumentNullException("sourceLocations");
      if (sourceLocations.Select(s => s.SourceFieldIndex).Distinct().Count() > 1)
        throw new ArgumentException("All sourceLocations must have the same SourceFieldIndex");
      if (bestMatchDeterminer == null)
        throw new ArgumentNullException("bestMatchDeterminer");

      // If there are no source locations then there is nothing to highlight
      if (!sourceLocations.Any())
        return new NonNullImmutableList<StringSegment>();

      // Sort sourceLocations by index and then length
      sourceLocations = sourceLocations.Sort((x, y) =>
      {
        if (x.SourceIndex < y.SourceIndex)
          return -1;
        else if (y.SourceIndex < x.SourceIndex)
          return 1;

        if (x.SourceTokenLength < y.SourceTokenLength)
          return -1;
        else if (y.SourceTokenLength < x.SourceTokenLength)
          return 1;

        return 0;
      });

      // Identify all combinations of source locations that can be shown at once without exceeding the
      // maxLengthForHighlightedContent constraint
      var sourceLocationChains = new NonNullImmutableList<NonNullImmutableList<SourceFieldLocation>>();
      for (var indexOfFirstSourceLocationInChain = 0;
        indexOfFirstSourceLocationInChain < sourceLocations.Count;
        indexOfFirstSourceLocationInChain++)
      {
        var sourceLocationChain = new NonNullImmutableList<SourceFieldLocation>();
        for (var indexOfLastSourceLocationInChain = indexOfFirstSourceLocationInChain;
          indexOfLastSourceLocationInChain < sourceLocations.Count;
          indexOfLastSourceLocationInChain++)
        {
          var startPoint = sourceLocations[indexOfFirstSourceLocationInChain].SourceIndex;
          var endPoint =
            sourceLocations[indexOfLastSourceLocationInChain].SourceIndex +
            sourceLocations[indexOfLastSourceLocationInChain].SourceTokenLength;
          if ((endPoint - startPoint) > maxLengthForHighlightedContent)
            break;

          sourceLocationChain = sourceLocationChain.Add(sourceLocations[indexOfLastSourceLocationInChain]);
          sourceLocationChains = sourceLocationChains.Add(sourceLocationChain);
        }
      }

      // Get the best source location chain, if any (if not, return an empty set) and translate into a
      // StringSegment set
      if (!sourceLocationChains.Any())
        return new NonNullImmutableList<StringSegment>();

      return ToStringSegments(
        sourceLocationChains.Sort(bestMatchDeterminer).First()
      );
    }

    private static NonNullImmutableList<StringSegment> ToStringSegments(
      NonNullImmutableList<SourceFieldLocation> sourceLocations)
    {
      if (sourceLocations == null)
        throw new ArgumentNullException("sourceLocations");
      if (!sourceLocations.Any())
        throw new ArgumentException("must not be empty", "sourceLocations");

      var stringSegments = new NonNullImmutableList<StringSegment>();
      var sourceLocationsToCombine = new NonNullImmutableList<SourceFieldLocation>();
      foreach (var sourceLocation in sourceLocations.Sort((x, y) => x.SourceIndex.CompareTo(y.SourceIndex)))
      {
        // If the current sourceLocation overlaps with the previous one (or adjoins it) then they should
        // be combined together (if there isn't a previous sourceLocation then start a new queue)
        if (!sourceLocationsToCombine.Any()
          || (sourceLocation.SourceIndex
            <= sourceLocationsToCombine.Max(s => (s.SourceIndex + s.SourceTokenLength)))
        )
        {
          sourceLocationsToCombine = sourceLocationsToCombine.Add(sourceLocation);
          continue;
        }

        // If the current sourceLocation marks the start of a new to-highlight segment then add any
        // queued-up sourceLocationsToCombine content to the stringSegments set..
        if (sourceLocationsToCombine.Any())
          stringSegments = stringSegments.Add(new StringSegment(sourceLocationsToCombine));

        // .. and start a new sourceLocationsToCombine list
        sourceLocationsToCombine = new NonNullImmutableList<SourceFieldLocation>(new[] { sourceLocation });
      }
      if (sourceLocationsToCombine.Any())
        stringSegments = stringSegments.Add(new StringSegment(sourceLocationsToCombine));
      return stringSegments;
    }

    public class StringSegment
    {
      public StringSegment(NonNullImmutableList<SourceFieldLocation> sourceLocations)
      {
        if (sourceLocations == null)
          throw new ArgumentNullException("sourceLocations");
        if (!sourceLocations.Any())
          throw new ArgumentException("must not be empty", "sourceLocations");
        if (sourceLocations.Select(s => s.SourceFieldIndex).Distinct().Count() > 1)
          throw new ArgumentException("All sourceLocations must have the same SourceFieldIndex");

        Index = sourceLocations.Min(s => s.SourceIndex);
        Length = sourceLocations.Max(s => (s.SourceIndex + s.SourceTokenLength) - Index);
        SourceLocations = sourceLocations;
      }

      public int Index { get; private set; }
      public int Length { get; private set; }
      public NonNullImmutableList<SourceFieldLocation> SourceLocations { get; private set; }
    }
  }
}

The overlap-prevention is important for my application since I want to be able to take arbitrary segments of the content and wrap them in <strong> tags so that they can appear highlighted - if there are segments that overlap then this isn't going to result in valid html!
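
For illustration, here is one way that a set of non-overlapping segments might be turned into highlighted markup. This is a sketch under my own assumptions rather than the code that the blog uses: HighlightSketch and Highlight are hypothetical names, the segments are plain (index, length) tuples instead of StringSegment instances and the content is assumed to be plain text that needs html-encoding as it is written out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using System.Text;

public static class HighlightSketch
{
    // Each segment is an (index, length) pair; the segments are assumed not to overlap
    public static string Highlight(string content, IEnumerable<Tuple<int, int>> segments)
    {
        if (content == null)
            throw new ArgumentNullException("content");
        if (segments == null)
            throw new ArgumentNullException("segments");

        var html = new StringBuilder();
        var position = 0;
        foreach (var segment in segments.OrderBy(s => s.Item1))
        {
            var index = segment.Item1;
            var length = segment.Item2;
            if ((index < position) || (length < 0) || ((index + length) > content.Length))
                throw new ArgumentException("segments must not overlap and must fit within content");

            // Write any unhighlighted text before the segment, then the wrapped segment itself
            html.Append(WebUtility.HtmlEncode(content.Substring(position, index - position)));
            html.Append("<strong>");
            html.Append(WebUtility.HtmlEncode(content.Substring(index, length)));
            html.Append("</strong>");
            position = index + length;
        }
        html.Append(WebUtility.HtmlEncode(content.Substring(position)));
        return html.ToString();
    }
}
```

Because the segments never overlap, a single left-to-right pass is enough and every opening tag is closed before the next one is written. Calling Highlight with the content "a cat & dog" and a single segment of (2, 3) returns "a <strong>cat</strong> &amp; dog".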

The other part of the puzzle is the "best match determiner". This also follows very closely the approach outlined:

public class BlogSearchTermBestMatchComparer : IComparer<NonNullImmutableList<SourceFieldLocation>>
{
  public int Compare(
    NonNullImmutableList<SourceFieldLocation> x,
    NonNullImmutableList<SourceFieldLocation> y)
  {
    if (x == null)
      throw new ArgumentNullException("x");
    if (y == null)
      throw new ArgumentNullException("y");

    // The greater the combined weight, the better (so compare y to x to sort descending)
    var combinedWeightComparisonResult =
      y.Sum(s => s.MatchWeightContribution)
        .CompareTo(x.Sum(s => s.MatchWeightContribution));
    if (combinedWeightComparisonResult != 0)
      return combinedWeightComparisonResult;

    // The fewer source locations in the chain, the better
    var numberOfTokensComparisonResult = x.Count.CompareTo(y.Count);
    if (numberOfTokensComparisonResult != 0)
      return numberOfTokensComparisonResult;

    // The earlier in the content that the matching starts, the better
    return x.Min(s => s.SourceIndex).CompareTo(y.Min(s => s.SourceIndex));
  }
}

Ok, there's actually one more thing. Since I currently use the GetPartialMatches method to deal with multi-word searches on my blog, I have a NonNullImmutableList<SourceFieldLocationWithTerm> rather than a NonNullImmutableList<SourceFieldLocation> so I have this alternate method signature:

public static NonNullImmutableList<StringSegment> IdentifySearchTermsToHighlight(

string content,

int maxLengthForHighlightedContent,