Using Roslyn code fixes to make the "Friction-less immutable objects in Bridge" even easier

This is going to be a short post about a Roslyn (or "The .NET Compiler Platform", if you're from Microsoft) analyser and code fix that I've added to a library. I'm not going to try to take you through the steps required to create an analyser nor how the Roslyn object model describes the code that you've written in the IDE* but I want to talk about the analyser itself because it's going to be very useful if you're one of the few people using my ProductiveRage.Immutable library. Also, I feel like the inclusion of analysers with libraries is something that's going to become increasingly common (and I want to be able to have something to refer back to if I get the chance to say "told you!" in the future).

* (This is largely because I'm still struggling with it a bit myself; my current process is to start with Use Roslyn to Write a Live Code Analyzer for Your API and the "Analyzer with Code Fix (NuGet + VSIX)" Visual Studio template. I then tinker around a bit and try running what I've got so far, so that I can use the "Syntax Visualizer" in the Visual Studio instance that is being debugged. Then I tend to do a lot of Google searches when I feel like I'm getting close to something useful.. how do I tell if a FieldDeclarationSyntax is for a readonly field or not? Oh, good, someone else has already written some code doing something like what I want to do - I look at the "Modifiers" property on the FieldDeclarationSyntax instance).
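To make that "Modifiers" lookup a little more concrete, here is a minimal sketch of the check. It assumes that the Microsoft.CodeAnalysis.CSharp NuGet package is referenced, and the "ReadonlyFieldFinder" class and its method are names made up for this illustration (they are not part of any library).

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;

// Hypothetical helper, for illustration only
public static class ReadonlyFieldFinder
{
    // Returns the names of all fields in the source that lack the "readonly" keyword
    // (note that this simple check would also list "const" fields)
    public static IEnumerable<string> GetNonReadonlyFieldNames(string source)
    {
        var root = CSharpSyntaxTree.ParseText(source).GetRoot();
        return root.DescendantNodes()
            .OfType<FieldDeclarationSyntax>()
            // "Modifiers" is the list of keyword tokens that precede the field's type
            .Where(field => !field.Modifiers.Any(SyntaxKind.ReadOnlyKeyword))
            // A single declaration may declare several variables (eg. "int a, b;")
            .SelectMany(field => field.Declaration.Variables)
            .Select(variable => variable.Identifier.ValueText)
            .ToList();
    }
}
```

Inside a real analyser you would be handed the FieldDeclarationSyntax by the analysis engine rather than parsing a string yourself, but the "Modifiers" test would be the same.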

As new .NET libraries get written, some of them will have guidelines and rules that can't easily be described through the type system. In the past, the only option for such rules would be to try to ensure that the documentation was clear and thorough (whether this be the project README and / or more in-depth online docs and / or the xml summary comment documentation for the types, methods, properties and fields that intellisense can bring to your attention in the IDE). The support that Visual Studio 2015 introduced for custom analysers* allows these rules to be communicated in a different manner.

* (I'm being English and stubborn, hence my use of "analysers" rather than "analyzers")

In short, they allow these library-specific guidelines and rules to be highlighted in the Visual Studio Error List, just like any error or warning raised by Visual Studio itself (even refusing to allow the project to be built, if an error-level message is recorded).

An excellent example that I've seen recently was encountered when I was writing some of my own analyser code. To do this, you can start with the "Analyzer with Code Fix (NuGet + VSIX)" template, which pulls in a range of NuGet packages and includes some template code of its own. You then need to write a class that is derived from DiagnosticAnalyzer. Your class will declare one or more DiagnosticDescriptor instances - each will be a particular rule that is checked. You then override an "Initialize" method, which allows your code to register for syntax changes and to raise any rules that have been broken. You must also override a "SupportedDiagnostics" property and return the set of DiagnosticDescriptor instances (ie. rules) that your analyser will cover. If the code that the "Initialize" method hooks up tries to raise a rule that "SupportedDiagnostics" did not declare, the rule will be ignored by the analysis engine. This would be a kind of (silent) runtime failure and it's something that is documented - but it's still a very easy mistake to make; you might create a new DiagnosticDescriptor instance and raise it from your "Initialize" method but forget to add it to the "SupportedDiagnostics" set.. whoops. In the past, you might not have realised until runtime that you'd made a mistake and, as a silent failure, you might have ended up getting very frustrated and been stuck wondering what had gone wrong. But, mercifully (and I say this as I made this very mistake), there is an analyser in the "Microsoft.CodeAnalysis.CSharp" NuGet package that brings this error immediately to your attention with the message:

RS1005 ReportDiagnostic invoked with an unsupported DiagnosticDescriptor

The entry in the Error List links straight to the code that called "context.ReportDiagnostic" with the unexpected rule. This is fantastic - instead of suffering a runtime failure, you are informed at compile time precisely what the problem is. Compile time is always better than run time (for many reasons - it's more immediate, so you don't have to wait until runtime, and it's more thorough; a runtime failure may only happen if a particular code path is followed, but static analysis such as this is like having every possible code path tested).
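For anyone who hasn't seen one, the shape of such an analyser class is roughly as follows. This is only a sketch of the pattern described above, assuming that the Microsoft.CodeAnalysis.CSharp NuGet package is referenced; the "EmptyConstructorAnalyzer" name, the "EXAMPLE001" rule id and the message text are all invented for the example and are not taken from any real library.

```csharp
using System.Collections.Immutable;
using Microsoft.CodeAnalysis;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;
using Microsoft.CodeAnalysis.Diagnostics;

[DiagnosticAnalyzer(LanguageNames.CSharp)]
public sealed class EmptyConstructorAnalyzer : DiagnosticAnalyzer
{
    // The rule that this analyser may raise (id, title and message are made up)
    private static readonly DiagnosticDescriptor EmptyConstructorRule = new DiagnosticDescriptor(
        id: "EXAMPLE001",
        title: "Empty constructor",
        messageFormat: "Constructor '{0}' has arguments but an empty body",
        category: "Design",
        defaultSeverity: DiagnosticSeverity.Warning,
        isEnabledByDefault: true);

    // Every rule passed to ReportDiagnostic must be declared here, otherwise the
    // analysis engine will ignore it (this is what RS1005 warns about)
    public override ImmutableArray<DiagnosticDescriptor> SupportedDiagnostics
        => ImmutableArray.Create(EmptyConstructorRule);

    public override void Initialize(AnalysisContext context)
    {
        // Ask to be called back for every constructor declaration in the code
        context.RegisterSyntaxNodeAction(AnalyzeConstructor, SyntaxKind.ConstructorDeclaration);
    }

    private static void AnalyzeConstructor(SyntaxNodeAnalysisContext context)
    {
        var constructor = (ConstructorDeclarationSyntax)context.Node;
        if (constructor.ParameterList.Parameters.Count == 0)
            return;
        // Body is null for expression-bodied constructors, which are not empty
        if (constructor.Body == null || constructor.Body.Statements.Count > 0)
            return;
        context.ReportDiagnostic(Diagnostic.Create(
            EmptyConstructorRule,
            constructor.Identifier.GetLocation(),
            constructor.Identifier.ValueText));
    }
}
```

If "EmptyConstructorRule" were left out of "SupportedDiagnostics" then the "ReportDiagnostic" call would be where the RS1005 entry in the Error List points.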

The analysers already in ProductiveRage.Immutable

The ProductiveRage uber-fans (who, surely exist.. yes? ..no? :D) may be thinking "doesn't the ProductiveRage.Immutable library already have some analysers built into it?"

And they would be correct; for some time now it has included a few analysers that try to prevent some simple mistakes. As a quick reminder, the premise of the library is that it will make creating immutable types in Bridge.NET easier.

Instead of writing something like this:

public sealed class EmployeeDetails

{

public EmployeeDetails(PersonId id, NameDetails name)

{

if (id == null)

throw new ArgumentNullException("id");

if (name == null)

throw new ArgumentNullException("name");

Id = id;

Name = name;

}

/// <summary>

/// This will never be null

/// </summary>

public PersonId Id { get; }

/// <summary>

/// This will never be null

/// </summary>

public NameDetails Name { get; }

public EmployeeDetails WithId(PersonId id)

{

return Id.Equals(id) ? this : new EmployeeDetails(id, Name);

}

public EmployeeDetails WithName(NameDetails name)

{

return Name.Equals(name) ? this : new EmployeeDetails(Id, name);

}

}

.. you can express it just as:

public sealed class EmployeeDetails : IAmImmutable

{

public EmployeeDetails(PersonId id, NameDetails name)

{

this.CtorSet(_ => _.Id, id);

this.CtorSet(_ => _.Name, name);

}

public PersonId Id { get; private set; }

public NameDetails Name { get; private set; }

}

The if-null-then-throw validation is encapsulated in the CtorSet call (since the library takes the view that no value should ever be null - it introduces an Optional struct so that you can identify properties that may be without a value). And it saves you from having to write "With" methods for the updates as IAmImmutable implementations may use the "With" extension method whenever you want to create a new instance with an altered property - eg.

var updatedEmployee = employee.With(_ => _.Name, newName);

The library can only work if certain conditions are met. For example, every property must have a getter and a setter - otherwise, the "CtorSet" extension method won't know how to actually set the value "under the hood" when populating the initial instance (nor would the "With" method know how to set the value on the new instance that it would create).

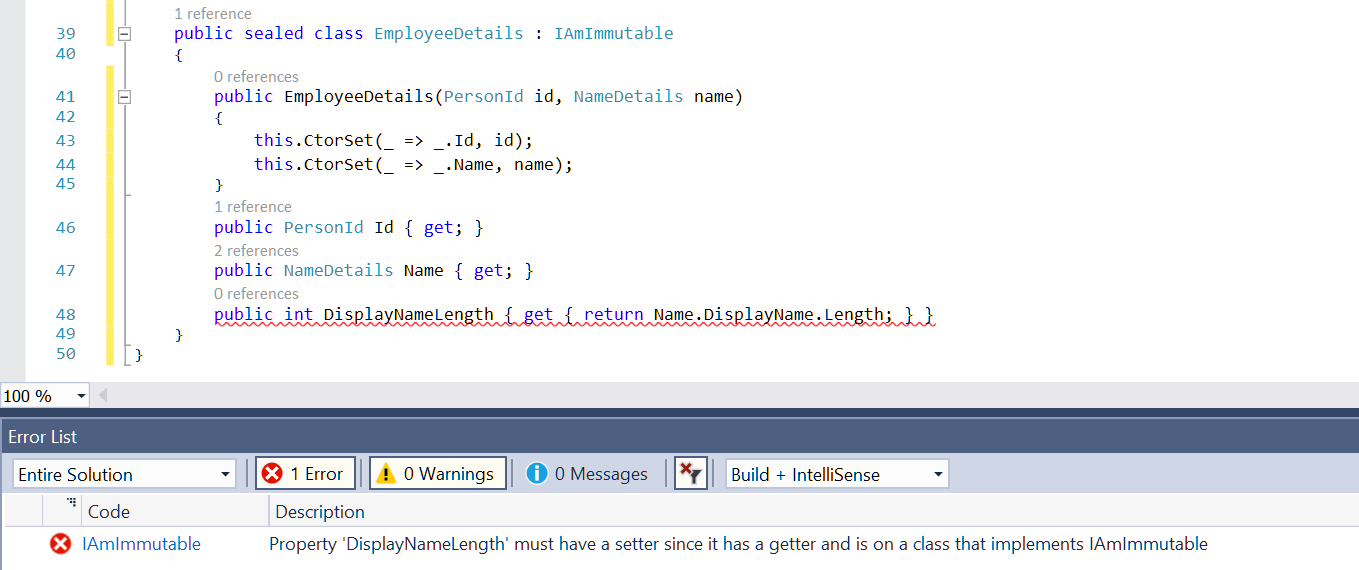

If you forgot this and wrote the following (note the "DisplayNameLength" property that is now effectively a computed value and there would be no way for us to directly set it via a "With" call) -

public sealed class EmployeeDetails : IAmImmutable

{

public EmployeeDetails(PersonId id, NameDetails name)

{

this.CtorSet(_ => _.Id, id);

this.CtorSet(_ => _.Name, name);

}

public PersonId Id { get; private set; }

public NameDetails Name { get; private set; }

public int DisplayNameLength { get { return Name.DisplayName.Length; } }

}

.. then you would see the following errors reported by Visual Studio (presuming you are using 2015 or later) -

.. which is one of the "common IAmImmutable mistakes" analysers identifying the problem for you.

Getting Visual Studio to write code for you, using code fixes

I've been writing more code with this library and I'm still, largely, happy with it. Making the move to assuming never-allow-null (which is baked into the "CtorSet" and "With" calls) means that the classes that I'm writing are a lot shorter and that type signatures are more descriptive. (I wrote about all this in my post at the end of last year "Friction-less immutable objects in Bridge (C# / JavaScript) applications" if you're curious for more details).

However.. I still don't really like typing out as much code for each class as I have to. Each class has to repeat the property names four times - once in the constructor, twice in the "CtorSet" call and a fourth time in the public property. Similarly, the type name has to be repeated twice - once in the constructor and once in the property.

This is better than the obvious alternative, which is to not bother with immutable types. I will gladly take the extra lines of code (and the effort required to write them) to get the additional confidence that a "stronger" type system offers - I wrote about this recently in my "Writing React with Bridge.NET - The Dan Way" posts; I think that it's really worthwhile to bake assumptions into the type system where possible. For example, the Props types of React components are assumed, by the React library, to be immutable - so having them defined as immutable types represents this requirement in the type system. If the Props types are mutable then it would be possible to write code that tries to change that data and then bad things could happen (you're doing something that the library expects not to happen). If the Props types are immutable then it's not even possible to write this particular kind of bad-things-might-happen code, which is a positive thing.

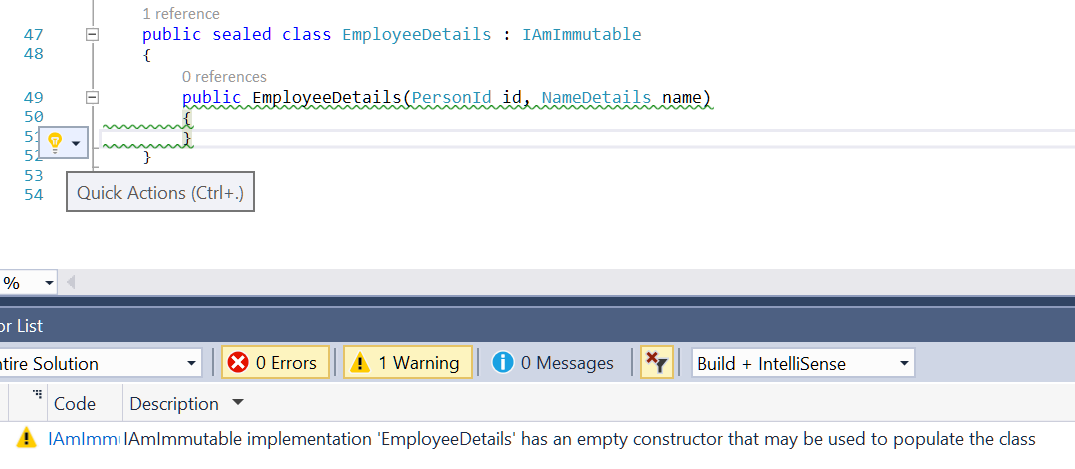

But still I get a niggling feeling that things could be better. And now they are! With Roslyn, you can not only identify particular patterns but you can also offer automatic fixes for them. So, if you were to start writing the EmployeeDetails class from scratch and got this far:

public sealed class EmployeeDetails : IAmImmutable

{

public EmployeeDetails(PersonId id, NameDetails name)

{

}

}

.. then an analyser could identify that you were writing an IAmImmutable implementation and that you have an empty constructor - it could then offer to fix that for you by filling in the rest of the class.

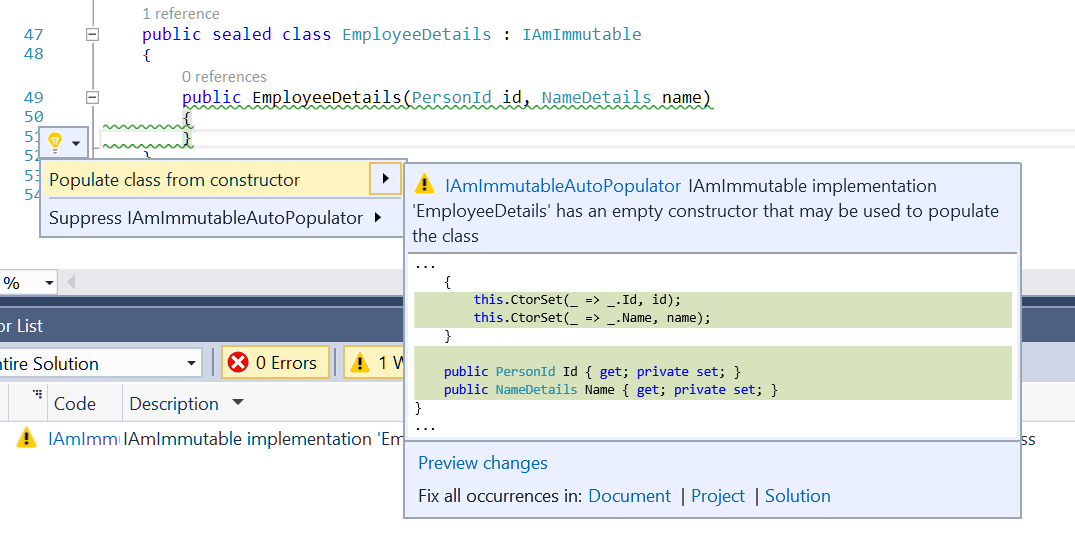

The latest version of the ProductiveRage.Immutable library (1.7.0) does just that. The empty constructor will not only be identified with a warning but a light bulb will also appear alongside the code. Clicking this (or pressing [Ctrl]-[.] while within the empty constructor, for fellow keyboard junkies) will present an option to "Populate class from constructor" -

Selecting the "Populate class from constructor" option -

.. will take the constructor arguments and generate the "CtorSet" calls and the public properties automatically. Now you can have all of the safety of the immutable type with no more typing effort than the mutable version!

// This is what you have to type for the immutable version,

// then the code fix will expand it for you

public sealed class EmployeeDetails : IAmImmutable

{

public EmployeeDetails(PersonId id, NameDetails name)

{

}

}

// This is what you would have typed if you were feeling

// lazy and creating mutable types because you couldn't

// be bothered with the typing overhead of immutability

public sealed class EmployeeDetails

{

public PersonId Id;

public NameDetails Name;

}
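To give a feel for what the "Populate class from constructor" expansion involves, here is a string-based sketch of the transformation. It is not the library's code fix (a real one works with Roslyn syntax nodes rather than strings) and the "PopulateFromConstructorSketch" class is a name invented for this example; it also assumes that every argument name is non-empty and that the properties are written with private setters.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, for illustration only
public static class PopulateFromConstructorSketch
{
    // Constructor argument "name" becomes property "Name"
    private static string ToPropertyName(string argumentName)
        => char.ToUpperInvariant(argumentName[0]) + argumentName.Substring(1);

    // One CtorSet call per constructor argument, to go inside the constructor body
    public static IReadOnlyList<string> CtorSetCalls(IEnumerable<(string Type, string Name)> arguments)
        => arguments
            .Select(a => $"this.CtorSet(_ => _.{ToPropertyName(a.Name)}, {a.Name});")
            .ToList();

    // One public property per constructor argument, to go after the constructor
    public static IReadOnlyList<string> Properties(IEnumerable<(string Type, string Name)> arguments)
        => arguments
            .Select(a => $"public {a.Type} {ToPropertyName(a.Name)} {{ get; private set; }}")
            .ToList();
}
```

Given the two EmployeeDetails constructor arguments (a PersonId called "id" and a NameDetails called "name"), this produces the two "CtorSet" lines and the two property declarations that would otherwise have to be typed by hand.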

To summarise

If you're already using the library, then all you need to do to start taking advantage of this code fix is update your NuGet reference* (presuming that you're using VS 2015 - analysers weren't supported in previous versions of Visual Studio).

* (Sometimes you have to restart Visual Studio after updating; you will know that this is the case if you get a warning in the Error List about Visual Studio not being able to load the ProductiveRage.Immutable analyser)

If you're writing your own library that has any guidelines or common gotchas that you have to describe in documentation somewhere (that the users of your library may well not read unless they have a problem - at which point they may even abandon the library, if they're only having an investigative play around with it) then I highly recommend that you consider using analysers to surface some of these assumptions and best practices. While I'm aware that I've not offered much concrete advice on how to write these analysers, the reason is that I'm still very much a beginner at it - but that puts me in a good position to be able to say that it really is fairly easy if you read a few articles about it (such as Use Roslyn to Write a Live Code Analyzer for Your API) and then just get stuck in. With some judicious Google'ing, you'll be making progress in no time!

I guess that only time will tell whether library-specific analysers become as prevalent as I imagine. It's very possible that I'm biased because I'm such a believer in static analysis. Let's wait and see*!

* Unless YOU are a library writer that this might apply to - in which case, make it happen rather than just sitting back to see what MIGHT happen! :)

Posted at 22:33

Easy "PureComponent" React performance boosts for Bridge.Net

React's great strength is that it makes creating UIs simple(r) because you can treat the view as a pure function - often, you essentially give a props reference into a top level component and it works out what to draw. Then, when something changes, you do the same again; trigger a full re-draw and rely upon React's Virtual DOM to work out what changed in an efficient manner and apply those changes to the browser DOM. The browser DOM is slow, which is why interactions with it should be minimised. The Virtual DOM is fast.

The common pre-React way to deal with UIs was to have some code to render the UI in an initial state and then further code that would change the UI based upon user interactions. React reduces these two types of state-handling (initial-display and update-for-specific-interaction) into one (full re-render).

And a lot of the time, the fast Virtual DOM performs quickly enough that you don't have to worry about what it's doing. But sometimes, you may have a UI that is so complicated that it's a lot of work for the Virtual DOM to calculate the diffs to apply to the browser DOM. Or you might have particularly demanding performance requirements, such as achieving 60 fps animations on mobile.

Handily, React has a way for you to give it hints - namely the ShouldComponentUpdate method that components may implement. This method can look at the component's current props and state values and the next props and state values and let React know if any changes are required. The method returns a boolean - false meaning "no, I don't need to redraw, this data looks the same" and true meaning "yes, I need to redraw for this new data". The method is optional, if a component doesn't implement it then it's equivalent to it always returning true. Remember, if a component returns true for "do I need to be redrawn?", the Virtual DOM is still what is responsible for dealing with the update - and it usually deals with it in a very fast and efficient manner. Returning true is not something to necessarily be worried about. However, if you can identify cases where ShouldComponentUpdate can return false then you can save the Virtual DOM from working out whether that component or any of its child components need to be redrawn. If this can be done high up in a deeply-nested component tree then it could save the Virtual DOM a lot of work.

The problem is, though, that coming up with a mechanism to reliably and efficiently compare object references (ie. props and / or state) to determine whether they describe the same data is difficult to do in the general case.

Let me paint a picture by describing a very simple example React application..

The Message Editor Example

Imagine an app that can read a list of messages from an API and allow the user of the app to edit these messages. Each message has "Content" and "Author" properties that are strings. Either of these values may be edited in the app. These messages are part of a message group that has a title - this also may be edited in the app.

(I didn't say that it was a useful or realistic app, it's just one to illustrate a point :)

The way that I like to create React apps is to categorise components as one of two things; a "Container Component" or a "Presentation Component". Presentation Components should be state-less, they should just be handed a props reference and then go off and draw themselves. Any interactions that the user makes with this component or any of its child components are effectively passed up (via change handlers on the props reference) until they reach a Container Component. The Container Component will translate these interactions into actions to send to the Dispatcher. Actions will be handled by a store (that will be listening out for Dispatcher actions that it's interested in). When a store handles an action, it emits a change event. The Container Component will be listening out for change events on stores that it is interested in - when this happens, the Container Component will trigger a re-render of itself by updating its state based upon data now available in the store(s) it cares about. This is a fairly standard Flux architecture and, I believe, the terms "Container Component" / "Presentation Component" are in reasonably common use (I didn't make them up, I just like the principle - one of the articles that I've read that uses these descriptions is Component Brick and Mortar: The React documentation I wish I had a year ago).

So, for my example app, I might have a component hierarchy that looks like this:

AppContainer
  Title
    TextInput
      Input
  MessageList
    MessageRow
      TextInput
        Input
      TextInput
        Input
    MessageRow
      TextInput
        Input
      TextInput
        Input

There will be as many "MessageRow" components as there are messages to edit. Input is a standard React-rendered element and all of the others (AppContainer, Title, MessageList, MessageRow and TextInput) are custom components.

(Note: This is not a sufficiently deeply-nested hierarchy that React would have any problems with rendering performance, it's intended to be just complicated enough to demonstrate the point that I'm working up to).

The AppContainer is the only "Container Component" and so is the only component that has a React state reference as well as props. A state reference is, essentially, what prevents a component from being what you might consider a "pure function" - where the props that are passed in are all that affects what is rendered out. React "state" is required to trigger a re-draw of the UI, but it should be present in as few places as possible - ie. there should only be one, or a small number of, top level component(s) that have state. Components that render only according to their props data are much easier to reason about (and hence easier to write, extend and maintain).

My Bridge.NET React bindings NuGet package makes it simple to differentiate between stateful (ie. Container) components and stateless (ie. Presentation) components as it has both a Component<TProps, TState> base class and a StatelessComponent<TProps> base class - you derive from the appropriate one when you create custom components (for more details, see React (and Flux) with Bridge.net - Redux).

To start with the simplest example, below is the TextInput component. This just renders a text Input with a specified value and communicates up any requests to change that string value via an "OnChange" callback -

public class TextInput : StatelessComponent<TextInput.Props>

{

public TextInput(Props props) : base(props) { }

public override ReactElement Render()

{

return DOM.Input(new InputAttributes

{

Type = InputType.Text,

Value = props.Content,

OnChange = OnTextChange

});

}

private void OnTextChange(FormEvent<InputElement> e)

{

props.OnChange(e.CurrentTarget.Value);

}

public class Props

{

public string Content { get; set; }

public Action<string> OnChange { get; set; }

}

}

It is fairly easy to envisage how you might try to implement "ShouldComponentUpdate" here - given a "this is the new props value" reference (which gets passed into ShouldComponentUpdate as an argument called "nextProps") and the current props reference, you need only look at the "Content" and "OnChange" references on the current and next props and, if both Content/Content and OnChange/OnChange references are the same, then we can return false (meaning "no, we do not need to re-draw this TextInput").

(Two things to note here: Firstly, it is not usually possible to directly compare the current props reference with the "nextProps" reference because it is common for the parent component to create a new props instance for each proposed re-render of a child component, rather than re-use a previous props instance - so the individual property values within the props references may all be consistent between the current props and nextProps, but the actual props references will usually be distinct. Secondly, the Bridge.NET React bindings only support React component life cycle method implementations on custom components derived from Component<TProps, TState> classes and not those derived from StatelessComponent<TProps>, so you couldn't actually write your own "ShouldComponentUpdate" for a StatelessComponent - but that's not important here, we're just working through a thought experiment).
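As a thought-experiment sketch, that comparison could be written as a standalone function like the one below. The "TextInputProps" and "TextInputComparer" names are invented for this example (the code uses no React bindings at all) and the return value follows the "ShouldComponentUpdate" meaning, where true means that a re-draw is needed.

```csharp
using System;

// Stand-in for TextInput.Props, so that the example has no dependencies
public sealed class TextInputProps
{
    public string Content { get; set; }
    public Action<string> OnChange { get; set; }
}

public static class TextInputComparer
{
    // true = "yes, I need to redraw", false = "no, this data looks the same"
    public static bool ShouldUpdate(TextInputProps current, TextInputProps next)
    {
        // Strings are compared by value, the callback is compared by reference
        return current.Content != next.Content
            || !ReferenceEquals(current.OnChange, next.OnChange);
    }
}
```

Note that the two props instances themselves are never compared, only the values inside them, and that the check can only return false if the parent passes the same callback reference each time instead of creating a new one for every render.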

Now let's move on to the MessageList and MessageRow components, since things get more complicated there -

public class MessageList : StatelessComponent<MessageList.Props>

{

public MessageList(Props props) : base(props) { }

public override ReactElement Render()

{

var messageRows = props.IdsAndMessages

.Select(idAndMessage => new MessageRow(new MessageRow.Props

{

Key = idAndMessage.Item1,

Message = idAndMessage.Item2,

OnChange = newMessage => props.OnChange(idAndMessage.Item1, newMessage)

}));

return DOM.Div(

new Attributes { ClassName = "message-list" },

messageRows

);

}

public class Props

{

public Tuple<int, MessageEditState>[] IdsAndMessages;

public Action<int, MessageEditState> OnChange;

}

}

public class MessageRow : StatelessComponent<MessageRow.Props>

{

public MessageRow(Props props) : base(props) { }

public override ReactElement Render()

{

// Note that the "Key" value from the props reference does not explicitly need

// to be mentioned here, the React bindings will deal with it (it is important

// to give dynamic children components unique key values, but it is handled by

// the bindings and the React library so long as a "Key" property is present

// on the props)

// - See https://facebook.github.io/react/docs/multiple-components.html for

// more details

return DOM.Div(new Attributes { ClassName = "message-row" },

new TextInput(new TextInput.Props

{

Content = props.Message.Content,

OnChange = OnContentChange

}),

new TextInput(new TextInput.Props

{

Content = props.Message.Author,

OnChange = OnAuthorChange

})

);

}

private void OnContentChange(string newContent)

{

props.OnChange(new MessageEditState

{

Content = newContent,

Author = props.Message.Author

});

}

private void OnAuthorChange(string newAuthor)

{

props.OnChange(new MessageEditState

{

Content = props.Message.Content,

Author = newAuthor

});

}

public class Props

{

public int Key;

public MessageEditState Message;

public Action<MessageEditState> OnChange;

}

}

public class MessageEditState

{

public string Content;

public string Author;

}

If the MessageList component wanted to implement "ShouldComponentUpdate" then its job is more difficult as it has an array of message data to check. It could do one of several things - the first, and most obviously accurate, would be to perform a "deep compare" of the arrays from the current props and the "nextProps"; ensuring firstly that there are the same number of items in both and then comparing each "Content" and "Author" value in each item of the arrays. If everything matches up then the two arrays contain the same data and (so long as the "OnChange" callback hasn't changed) the component doesn't need to re-render. Avoiding re-rendering this component (and, subsequently, any of its child components) would be a big win because it accounts for a large portion of the total UI. Not re-rendering it would give the Virtual DOM much less work to do. But would a deep comparison of this type actually be any cheaper than letting the Virtual DOM do what it's designed to do?

The second option is to presume that whoever created the props references would have re-used any MessageEditState instances that haven't changed. So the array comparison could be reduced to ensuring that the current and next props references both have the same number of elements and then performing reference equality checks on each item.

The third option is to presume that whoever created the props reference would have re-used the array itself if the data hadn't changed, meaning that a simple reference equality check could be performed on the current and next props' arrays.

The second and third options are both much cheaper than a full "deep compare" but they both rely upon the caller following some conventions. This is why I say that this is a difficult problem to solve for the general case.
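The three options can be put side by side in code. This is a simplified sketch; it compares plain MessageEditState arrays rather than the Tuple<int, MessageEditState> arrays from the MessageList props, and "MessageArrayComparers" is a name made up for the example.

```csharp
using System;

// The mutable message type, repeated so that the example is self-contained
public sealed class MessageEditState
{
    public string Content;
    public string Author;
}

public static class MessageArrayComparers
{
    // Option 1: deep compare, always accurate but it looks at every value
    public static bool DeepEquals(MessageEditState[] x, MessageEditState[] y)
    {
        if (x.Length != y.Length)
            return false;
        for (var i = 0; i < x.Length; i++)
        {
            if (x[i].Content != y[i].Content || x[i].Author != y[i].Author)
                return false;
        }
        return true;
    }

    // Option 2: presume that unchanged messages are re-used, so only the item
    // references need to be compared
    public static bool ItemReferencesEqual(MessageEditState[] x, MessageEditState[] y)
    {
        if (x.Length != y.Length)
            return false;
        for (var i = 0; i < x.Length; i++)
        {
            if (!ReferenceEquals(x[i], y[i]))
                return false;
        }
        return true;
    }

    // Option 3: presume that the array itself is re-used if nothing has changed
    public static bool ArrayReferencesEqual(MessageEditState[] x, MessageEditState[] y)
    {
        return ReferenceEquals(x, y);
    }
}
```

With these mutable types, options two and three can also be fooled in the other direction; if a caller changes the "Content" of a message in place then the references still match, even though the data no longer does.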

Immutability to the rescue

There is actually another option to consider: the object models for the props data could be rewritten to use immutable types. These have the advantage that if you find that two references are equal then they are guaranteed to contain the same data. They also have the advantage that it's much more common to re-use instances to describe the same data - partly because there is some overhead to initialising immutable types and partly because there is no fear that "if I give this reference to this function, I want to be sure that it can't change the data in my reference while doing its work" because it is impossible to change an immutable reference's data. (I've seen defensively-written code that clones mutable references that it passes into other functions, to be sure that no other code can change the data in the original reference - this is never required with immutable types).

Conveniently, I've recently written a library to use with Bridge.NET which I think makes creating and working with immutable types easier than C# makes it on its own. I wrote about it in "Friction-less immutable objects in Bridge (C# / JavaScript) applications" but the gist is that you re-write MessageEditState as:

// You need to pull in the "ProductiveRage.Immutable" NuGet package to use IAmImmutable

public class MessageEditState : IAmImmutable

{

public MessageEditState(string content, string author)

{

this.CtorSet(_ => _.Content, content);

this.CtorSet(_ => _.Author, author);

}

public string Content { get; private set; }

public string Author { get; private set; }

}

It's still a little more verbose than the mutable version, admittedly, but I'm hoping to convince you that it's worth it (if you need convincing!) for the benefits that we'll get.

When you have an instance of this new MessageEditState class, if you need to change one of the properties, you don't have to call the constructor each time to get a new instance, you can use the "With" extension methods that may be called on any IAmImmutable instance - eg.

var updatedMessage = message.With(_ => _.Content, "New information");

This would mean that the change handlers from MessageRow could be altered from:

private void OnContentChange(string newContent)

{

props.OnChange(new MessageEditState

{

Content = newContent,

Author = props.Message.Author

});

}

private void OnAuthorChange(string newAuthor)

{

props.OnChange(new MessageEditState

{

Content = props.Message.Content,

Author = newAuthor

});

}

and replaced with:

private void OnContentChange(string newContent)

{

props.OnChange(props.Message.With(_ => _.Content, newContent));

}

private void OnAuthorChange(string newAuthor)

{

props.OnChange(props.Message.With(_ => _.Author, newAuthor));

}

Immediately, the verbosity added to MessageEditState is being offset with tidier code! (And it's nice not having to set both "Content" and "Author" when only changing one of them).

The "With" method also has a small trick up its sleeve in that it won't return a new instance if the new property value is the same as the old property value. This is an eventuality that could happen in the code above as an "Input" element rendered by React will raise an "OnChange" event for any action that might have altered the text input's content. For example, if you had a text box with the value "Hello" in it and you selected all of that text and then pasted in text from the clipboard over the top of it, if the clipboard text was also "Hello" then the "OnChange" event will be raised, even though the actual value has not changed (it was "Hello" before and it's still "Hello" now). The "With" method will deal with this, though, and just pass the same instance straight back out. This is an illustration of the "reuse of instances for unchanged data" theme that I alluded to above.
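The same-instance behaviour is easy to demonstrate with a hand-written equivalent. The class below is a plain C# stand-in (it does not use the ProductiveRage.Immutable library, and "HandWrittenMessage" and "WithContent" are names invented for the example) that shows only the "pass the same reference back out if nothing changed" idea.

```csharp
using System;

// Hand-written immutable type, for illustration only
public sealed class HandWrittenMessage
{
    public HandWrittenMessage(string content, string author)
    {
        Content = content ?? throw new ArgumentNullException(nameof(content));
        Author = author ?? throw new ArgumentNullException(nameof(author));
    }

    public string Content { get; }
    public string Author { get; }

    // Returns this instance if the value is unchanged, otherwise a new instance
    public HandWrittenMessage WithContent(string content)
    {
        return (Content == content) ? this : new HandWrittenMessage(content, Author);
    }
}
```

So if a message has the content "Hello" and the user pastes "Hello" over the top of it, calling WithContent("Hello") hands back the original reference and any reference equality check further down the line will report that nothing has changed.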

The next step would be to change the array type in the MessageList.Props type from

public Tuple<int, MessageEditState>[] IdsAndMessages;

to

public NonNullList<Tuple<int, MessageEditState>> IdsAndMessages;

The NonNullList class is also in the ProductiveRage.Immutable NuGet package. It's basically an immutable IEnumerable that may be used in Bridge.NET projects. A simple example of it in use is:

// Create a new set of values (the static "Of" method uses type inference to determine

// the type of "T" in the returned "NonNullList<T>" - since 1, 2 and 3 are all ints, the

// "numbers" reference will be of type "NonNullList<int>")

var numbers = NonNullList.Of(1, 2, 3);

// SetValue takes an index and a new value, so calling SetValue(2, 4) on a set

// containing 1, 2, 3 will return a new set containing the values 1, 2, 4

numbers = numbers.SetValue(2, 4);

// Calling SetValue(2, 4) on a set containing values 1, 2, 4 does not require any

// changes, so the input reference is passed straight back out

numbers = numbers.SetValue(2, 4);

As with IAmImmutable instances, we get two big benefits - we can rely on reference equality comparisons more often, since the data with any given reference can never change, and references will be reused in many cases if operations are requested that would not actually change the data. (It's worth noting that the guarantees fall apart if any property on an IAmImmutable reference is of a mutable type, similarly if a NonNullList has elements that are a mutable type, or that have nested properties that are of a mutable type.. but so long as immutability is used "all the way down" then all will be well).
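To show where the reference reuse comes from, here is a tiny array-backed list with the same "SetValue" behaviour. It is a sketch only and not how NonNullList is implemented; the "TinyImmutableList" name is invented, it does not reject null values and its use of EqualityComparer<T>.Default to decide whether a value has changed is an assumption made for the example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical minimal immutable list, for illustration only
public sealed class TinyImmutableList<T>
{
    private readonly T[] _items;

    public TinyImmutableList(params T[] items)
    {
        // Copy the values so that the caller can not change them afterwards
        _items = (T[])items.Clone();
    }

    public int Count => _items.Length;

    public T this[int index] => _items[index];

    // Returns this instance if the value at the index is already the one requested,
    // otherwise returns a new list (the original is never altered)
    public TinyImmutableList<T> SetValue(int index, T value)
    {
        if (index < 0 || index >= _items.Length)
            throw new ArgumentOutOfRangeException(nameof(index));
        if (EqualityComparer<T>.Default.Equals(_items[index], value))
            return this;
        var copy = (T[])_items.Clone();
        copy[index] = value;
        return new TinyImmutableList<T>(copy);
    }
}
```

Because an unchanged list comes back as the same reference, a component that receives it as a props value can tell with a single reference comparison that there is nothing new to draw.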

If this philosophy was followed, then suddenly the "ShouldComponentUpdate" implementation for the MessageList component would be very easy to write - just perform reference equality comparisons on the "IdsAndMessages" and "OnChange" values on the current props and on the nextProps. While solving the problem for the general case is very difficult, solving it when you introduce some constraints (such as the use of immutable and persistent data types) can be very easy!

If we did implement this MessageList "ShouldComponentUpdate" method, then we could be confident that when a user makes changes to the "Title" text input that the Virtual DOM would not have to work out whether the MessageList or any of its child components had changed - because we'd have told the Virtual DOM that they hadn't (because the "IdsAndMessages" and "OnChange" property references wouldn't have changed).

We could take this a step further, though, and consider the idea of implementing "ShouldComponentUpdate" on other components - such as MessageRow. If the user edits a text value within one row, then the MessageList will have to perform some re-rendering work, since one of its child components needs to be re-rendered. But there's no need for any of the other rows to re-render, it could be just the single row in which the change was requested by the user.

So the MessageRow could look at its props values and, if they haven't changed between the current props and the nextProps, then inform React (via "ShouldComponentUpdate") that no re-render is required.

And why not go even further and just do this on all Presentation Components? The TextInput could avoid the re-render of its child Input if the props' "Content" and "OnChange" references are not being updated.

Introducing the Bridge.React "PureComponent"

To make this easy, I've added a new base class to the React bindings (available in 1.4 of Bridge.React); the PureComponent<TProps>.

This, like the StatelessComponent<TProps>, is very simple and does not support state and only allows the "Render" method to be implemented - no other React lifecycle functions (such as "ComponentWillMount", "ShouldComponentUpdate", etc..) may be defined on components deriving from this class.

The key difference is that it has its own "ShouldComponentUpdate" implementation that presumes that the props data is immutable and basically does what I've been describing above automatically - when React checks "ShouldComponentUpdate", it will look at the "props" and "nextProps" instances and compare their property values. (It also deals with the cases where one or both of them are null, in case you want components whose props reference is optional).
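To make that comparison a little more concrete, here is a rough JavaScript sketch of a shallow props check. It is not the Bridge.React source; the "shouldComponentUpdate" function and the props shapes below are my own illustrative assumptions, and it only covers plain reference equality and the null handling.

```javascript
// Hypothetical helper, not the Bridge.React source: returns true when a
// re-render is needed because some props value has a different reference.
function shouldComponentUpdate(props, nextProps) {
  const hasCurrent = props !== null && props !== undefined;
  const hasNext = nextProps !== null && nextProps !== undefined;
  // If either side is missing then it's only "unchanged" if both are missing
  if (!hasCurrent || !hasNext) {
    return hasCurrent !== hasNext;
  }
  // Compare every property that appears on either props object
  const names = new Set([...Object.keys(props), ...Object.keys(nextProps)]);
  for (const name of names) {
    if (props[name] !== nextProps[name]) {
      return true;
    }
  }
  return false;
}

const messages = Object.freeze(["Hello", "World"]);
const onChange = function () {};
const current = { idsAndMessages: messages, onChange: onChange };
const same = { idsAndMessages: messages, onChange: onChange };
const changed = { idsAndMessages: Object.freeze(["Hello"]), onChange: onChange };

console.log(shouldComponentUpdate(current, same)); // false
console.log(shouldComponentUpdate(current, changed)); // true
```

The check only works because the props values are immutable; if "messages" could be changed in place then an unchanged reference would tell us nothing.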

This is not an original idea, by a long shot. I first became aware of people doing this in 2013 when I read The Future of JavaScript MVC Frameworks, which was talking about using ClojureScript and its React interface "Om". More recently, I was reading Performance Engineering with React (Part 1), which talks about roughly the same subject but with vanilla JavaScript. And, of course, Facebook has long had its PureRenderMixin - though mixins can't be used with ES6 components (which seems to be the approach to writing components that Facebook is pushing at the moment).

So, this is largely just making it easy when writing React applications with Bridge. However, using Bridge to do this does give us some extra advantages (on top of the joy of being able to write React apps in C#!). In the code earlier (from the MessageRow Render method) -

new TextInput(new TextInput.Props

{

Content = props.Message.Content,

OnChange = OnContentChange

})

Bridge will bind the "OnContentChange" method to the current MessageRow instance so that when it is called by the TextInput's "OnChange" event, "this" is the MessageRow and not the TextInput (which is important because OnContentChange needs to access the "props" reference scoped to the MessageRow).

This introduces a potential wrinkle in our plan, though, as this binding process creates a new JavaScript method each time and means that each time the TextInput is rendered, the "OnChange" reference is new. So if we try to perform simple reference equality checks on props values, then we won't find the current "OnChange" and the new "OnChange" to be the same.

This problem is mentioned in the "Performance Engineering" article I linked above:

Unfortunately, each call to Function.bind produces a new function.. No amount of prop checking will help, and your component will always re-render.

..

The simplest solution we've found is to pass the unbound function.

When using Bridge, we don't have the option of using an unbound function since the function-binding is automatically introduced by the C#-to-JavaScript translation process. And it's very convenient, so it's not something that I'd ideally like to have to work around.

Having a dig through Bridge's source code, though, revealed some useful information. When Bridge.fn.bind is called, it returns a new function (as just discussed).. but with some metadata attached to it. When it returns a new function, it sets two properties on it: "$scope" and "$method". The $scope reference is what "this" will be set to when the bound function is called and the $method reference is the original function that is being bound. This means that, when the props value comparisons are performed, if a value is a function and the reference equality comparison fails, a fallback approach may be attempted - if both functions have $scope and $method references defined then compare them and, if they are both consistent between the function value on the current props and the function value on the nextProps, then consider the value to be unchanged.
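A small sketch of that fallback, in JavaScript. The "bind" function here is only a stand-in that I've written to mimic the $scope / $method behaviour described above (it is not Bridge's implementation) and "functionsAreEquivalent" is a hypothetical name for the comparison.

```javascript
// Stand-in for Bridge.fn.bind (an assumption, not Bridge's source): returns a
// new function each time and records the target and the original function.
function bind(scope, method) {
  const bound = function () {
    return method.apply(scope, arguments);
  };
  bound.$scope = scope;
  bound.$method = method;
  return bound;
}

// Hypothetical comparison: reference equality first, then the metadata fallback
function functionsAreEquivalent(x, y) {
  if (x === y) {
    return true;
  }
  if (typeof x !== "function" || typeof y !== "function") {
    return false;
  }
  return x.$scope !== undefined && x.$method !== undefined
    && x.$scope === y.$scope
    && x.$method === y.$method;
}

const row = {
  content: "",
  onContentChange: function (newContent) { this.content = newContent; }
};
const first = bind(row, row.onContentChange);
const second = bind(row, row.onContentChange);

console.log(first === second); // false
console.log(functionsAreEquivalent(first, second)); // true
```

Two separately-bound functions are different references but, so long as they bind the same method to the same target, the metadata lets them be treated as unchanged.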

The PureComponent's "ShouldComponentUpdate" implementation deals with this automatically, so you don't have to worry about it.

It's possibly worth noting that the "Performance Engineering" post did briefly consider something similar -

Another possibility we've explored is using a custom bind function that stores metadata on the function itself, which in combination with a more advanced check function, could detect bound functions that haven't actually changed.

Considering that Bridge automatically includes this additional metadata, it seemed to me to be sensible to use it.

There's one other equality comparison that is supported; as well as simple referential equality and the function equality gymnastics described above, if both of the values are non-null and the first has an "Equals" function then this function will be considered. This means that any custom "Equals" implementations that you define on classes will be automatically taken into consideration by the PureComponent's logic.
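As a sketch of that last fallback (leaving out the bound-function check for brevity), something like the following would do the job. Note that "valuesAreEquivalent" and "MessageId" are hypothetical names and that the lower-case "equals" is my assumption about how a C# "Equals" method appears on the translated JavaScript object.

```javascript
// Hypothetical sketch; the "equals" name is an assumption about how a C#
// Equals method appears on the translated JavaScript object
function valuesAreEquivalent(x, y) {
  if (x === y) {
    return true;
  }
  // Only consult a custom equality function when both values are non-null
  if (x !== null && x !== undefined && y !== null && y !== undefined
      && typeof x.equals === "function") {
    return x.equals(y) === true;
  }
  return false;
}

class MessageId {
  constructor(value) { this.value = value; }
  equals(other) { return other instanceof MessageId && other.value === this.value; }
}

console.log(valuesAreEquivalent(new MessageId(1), new MessageId(1))); // true
console.log(valuesAreEquivalent(new MessageId(1), new MessageId(2))); // false
console.log(valuesAreEquivalent({}, {})); // false
```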

Another Bridge.NET bonus: Lambda support

When I started writing this post, there was going to be a section here with a warning about using lambdas as functions in props instances, rather than using named functions (which the examples thus far have done).

As with bound functions, anywhere that an anonymous function is present in JavaScript, it will result in a new function value being created. If, for example, we change the MessageRow class from:

public class MessageRow : PureComponent<MessageRow.Props>

{

public MessageRow(Props props) : base(props) { }

public override ReactElement Render()

{

return DOM.Div(new Attributes { ClassName = "message-row" },

new TextInput(new TextInput.Props

{

Content = props.Message.Content,

OnChange = OnContentChange

}),

new TextInput(new TextInput.Props

{

Content = props.Message.Author,

OnChange = OnAuthorChange

})

);

}

private void OnContentChange(string newContent)

{

props.OnChange(props.Message.With(_ => _.Content, newContent));

}

private void OnAuthorChange(string newAuthor)

{

props.OnChange(props.Message.With(_ => _.Author, newAuthor));

}

public class Props

{

public int Key;

public MessageEditState Message;

public Action<MessageEditState> OnChange;

}

}

to:

public class MessageRow : PureComponent<MessageRow.Props>

{

public MessageRow(Props props) : base(props) { }

public override ReactElement Render()

{

return DOM.Div(new Attributes { ClassName = "message-row" },

new TextInput(new TextInput.Props

{

Content = props.Message.Content,

OnChange = newContent =>

props.OnChange(props.Message.With(_ => _.Content, newContent))

}),

new TextInput(new TextInput.Props

{

Content = props.Message.Author,

OnChange = newAuthor =>

props.OnChange(props.Message.With(_ => _.Author, newAuthor))

})

);

}

public class Props

{

public int Key;

public MessageEditState Message;

public Action<MessageEditState> OnChange;

}

}

then there would be problems with the "OnChange" props values specified because each new lambda - eg..

OnChange = newContent =>

props.OnChange(props.Message.With(_ => _.Content, newContent))

would result in a new JavaScript function being passed to Bridge.fn.bind every time that it was called:

onChange: Bridge.fn.bind(this, function (newContent) {

this.getprops().onChange(

ProductiveRage.Immutable.ImmutabilityHelpers.$with(

this.getprops().message,

function (_) { return _.getContent(); },

newContent

)

);

})

And this would prevent the PureComponent's "ShouldComponentUpdate" logic from being effective, since the $method values from the current props "OnChange" and the nextProps "OnChange" bound functions would always be different.

I was quite disappointed when I realised this and was considering trying to come up with some sort of workaround - maybe calling "toString" on both $method values and comparing their implementations.. but I couldn't find definitive information about the performance implications of this and I wasn't looking forward to constructing my own suite of tests to investigate any potential performance impact of this across different browsers and different browser versions.

My disappointment was two-fold: firstly, using the lambdas allows for more succinct code and less syntactic noise - since the types of the lambda's argument(s) and return value (if any) are inferred, rather than having to be explicitly typed out.

newContent => props.OnChange(props.Message.With(_ => _.Content, newContent))

is clearly shorter than

private void OnContentChange(string newContent)

{

props.OnChange(props.Message.With(_ => _.Content, newContent));

}

The other reason that I was deflated upon realising this was that it meant that the "ShouldComponentUpdate" implementation would, essentially, silently fail for components that used lambdas - "ShouldComponentUpdate" would return true in cases where I would like it to return false. There would be no compiler error and the UI code would still function, but it wouldn't be as efficient as it could be (the Virtual DOM would have to do more work than necessary).

Instead, I had a bit of a crazy thought.. lambdas like this, that only need to access their own arguments and the "this" reference, could be "lifted" into named functions quite easily. Essentially, I'm doing this manually by writing methods such as "OnContentChange". But could the Bridge translator do something like this automatically - take those C# lambdas and convert them into named functions in JavaScript? That way, I would get the benefit of the succinct lambda format in C# and the PureComponent optimisations would work.
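To illustrate the difference that "lifting" makes, here is a rough JavaScript sketch. The "bind" function is my own stand-in for Bridge.fn.bind and the two render functions are hypothetical - this is not a claim about the precise JavaScript that the Bridge translator emits, only a demonstration of why a function that is declared once behaves differently to an anonymous function that is declared inside a render.

```javascript
// Stand-in for Bridge.fn.bind (an assumption, not Bridge's source)
function bind(scope, method) {
  const bound = function () { return method.apply(scope, arguments); };
  bound.$scope = scope;
  bound.$method = method;
  return bound;
}

// Anonymous function: a new function value is created on every render call
function renderWithLambda(row) {
  return { onChange: bind(row, function (newContent) { this.content = newContent; }) };
}

// "Lifted" version: the function is declared once and reused by every render
function liftedOnChange(newContent) { this.content = newContent; }
function renderWithLiftedFunction(row) {
  return { onChange: bind(row, liftedOnChange) };
}

const row = { content: "" };
const a = renderWithLambda(row).onChange;
const b = renderWithLambda(row).onChange;
console.log(a.$method === b.$method); // false

const c = renderWithLiftedFunction(row).onChange;
const d = renderWithLiftedFunction(row).onChange;
console.log(c.$method === d.$method); // true
```

With the lifted function, the $method references match between renders and so a comparison based on $scope and $method can recognise that nothing has changed.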

Well, once again the Bridge.NET Team came through for me! I raised a Feature Request about this, explained what I'd like in an ideal world (and why) and five days later there was a branch on GitHub where I could preview changes that did precisely what I wanted!

This is not just an example of fantastic support from the Bridge Team, it is also, I believe, an incredible feature for Bridge and a triumph for writing front-end code in C#! Having this "translation step" from C# to JavaScript provides the opportunity for handy features to be included for free - earlier we saw how the insertion of Bridge.fn.bind calls by the translator meant that we had access to $method and $scope metadata (which side-steps one of the problems that the author of Performance Engineering with React ran into) but, here, the translation step can remove the performance overhead that anonymous functions were going to cause for our "ShouldComponentUpdate" implementation, without there being any burden on the developer writing the C# code.

It's also worth considering the fact that every allocation made in JavaScript is a reference that needs to be tidied up by the browser's garbage collector at some point. A big reason why judicious use of "ShouldComponentUpdate" can make UIs faster is that there is less work for the Virtual DOM to do, but it also eases the load on the garbage collector because none of the memory allocations need to be made for child components of components that do not need to be re-rendered. Since anonymous JavaScript functions are created over and over again (every time that the section of code that declares the anonymous function is executed), lifting them into named functions means that there will be fewer allocations in your SPA and hence even less work for the garbage collector to do.

Note: As of the 11th of February 2016, this Bridge.NET improvement has not yet been made live - but their release cycles tend to be fairly short and so I don't imagine that it will be very long until it is included in an official release. If you were desperate to write any code with PureComponent before then, you could either avoid lambdas in your C# code or you could use lambdas now, knowing that the PureComponent won't be giving you the full benefit immediately - but that you WILL get the full benefit when the Bridge Team release the update.

So it's an unequivocal success then??

Well, until it transpired that the Bridge translator would be altered to convert these sorts of lambdas into named functions, I was going to say "this is good, but..". However, with that change in sight, I'm just going to say outright "yes, and I'm going to change all classes that derive from StatelessComponent in my projects to derive from PureComponent". This will work fine, so long as your props references are all immutable (meaning that they are immutable all the way down - you shouldn't have, say, a props property that is an immutable NonNullList of references, but where those references have mutable properties).

And, if you're not using immutable props types - sort yourself out! While a component is being rendered (according to the Facebook React Tutorial):

props are immutable: they are passed from the parent and are "owned" by the parent

So, rather than having props only be immutable during component renders (by a convention that the React library enforces), why not go whole-hog and use fully immutable classes to describe your props types - that way props are fully immutable and you can use the Bridge.React's PureComponent to get performance boosts for free!

(Now seems like a good time to remind you of my post "Friction-less immutable objects in Bridge (C# / JavaScript) applications", which illustrates how to use the ProductiveRage.Immutable NuGet package to make defining immutable classes just that bit easier).

Posted at 20:11

Friction-less immutable objects in Bridge (C# / JavaScript) applications

One of the posts that I've written that got the most "audience participation*" was one from last year "Implementing F#-inspired 'with' updates for immutable classes in C#", where I spoke about trying to ease the burden in C# of representing data with immutable classes by reducing the repetitive typing involved.

* (I'd mis-remembered receiving more criticism about this than I actually did - now that I'm looking back at the comments left on the post and on reddit, the conversations and observations are pretty interesting and largely constructive!)

The gist was that if I wanted to have a class that represented, for the sake of a convoluted example, an employee that had a name, a start-of-employment date and some notes (which are optional and so may not be populated) then we might have something like the following:

public class EmployeeDetails

{

public EmployeeDetails(string name, DateTime startDate, string notesIfAny)

{

if (string.IsNullOrWhiteSpace(name))

throw new ArgumentException("name");

Name = name.Trim();

StartDate = startDate;

NotesIfAny = (notesIfAny == null) ? null : notesIfAny.Trim();

}

/// <summary>

/// This will never be null or blank, it will not have any leading or trailing whitespace

/// </summary>

public string Name { get; private set; }

public DateTime StartDate { get; private set; }

/// <summary>

/// This will be null if it has no value, otherwise it will be a non-blank string with no

/// leading or trailing whitespace

/// </summary>

public string NotesIfAny { get; private set; }

}

If we wanted to update a record with some notes where previously it had none then we'd need to create a new instance, something like:

var updatedEmployee = new EmployeeDetails(

employee.Name,

employee.StartDate,

"Awesome attitude!"

);

This sort of thing (calling the constructor explicitly) gets old quickly, particularly if the class gets extended in the future since then anywhere that did something like this would have to add more arguments to the constructor call.

So an alternative is to include "With" functions in the class -

public class EmployeeDetails

{

public EmployeeDetails(string name, DateTime startDate, string notesIfAny)

{

if (string.IsNullOrWhiteSpace(name))

throw new ArgumentException("name");

Name = name.Trim();

StartDate = startDate;

NotesIfAny = (notesIfAny == null) ? null : notesIfAny.Trim();

}

/// <summary>

/// This will never be null or blank, it will not have any leading or trailing whitespace

/// </summary>

public string Name { get; private set; }

public DateTime StartDate { get; private set; }

/// <summary>

/// This will be null if it has no value, otherwise it will be a non-blank string with no

/// leading or trailing whitespace

/// </summary>

public string NotesIfAny { get; private set; }

public EmployeeDetails WithName(string value)

{

return (value == Name) ? this : new EmployeeDetails(value, StartDate, NotesIfAny);

}

public EmployeeDetails WithStartDate(DateTime value)

{

return (value == StartDate) ? this : new EmployeeDetails(Name, value, NotesIfAny);

}

public EmployeeDetails WithNotesIfAny(string value)

{

return (value == NotesIfAny) ? this : new EmployeeDetails(Name, StartDate, value);

}

}

Now the update code is more succinct -

var updatedEmployee = employee.WithNotesIfAny("Awesome attitude!");

Another benefit of this approach is that the With functions can include a quick check to ensure that the new value is not the same as the current value - if it is then there's no need to generate a new instance, the current instance can be returned straight back out. This saves generating a new object reference and it makes it easier to rely upon simple reference equality tests when determining whether data has changed - eg.

var updatedEmployee = employee.WithNotesIfAny("Awesome attitude!");

var didEmployeeAlreadyHaveAwesomeAttitude = (updatedEmployee == employee);

Last year's post went off on a few wild tangents but basically was about allowing something like the following to be written:

public class EmployeeDetails

{

public EmployeeDetails(string name, DateTime startDate, string notesIfAny)

{

if (string.IsNullOrWhiteSpace(name))

throw new ArgumentException("name");

Name = name.Trim();

StartDate = startDate;

NotesIfAny = (notesIfAny == null) ? null : notesIfAny.Trim();

}

/// <summary>

/// This will never be null or blank, it will not have any leading or trailing whitespace

/// </summary>

public string Name { get; private set; }

public DateTime StartDate { get; private set; }

/// <summary>

/// This will be null if it has no value, otherwise it will be a non-blank string with no

/// leading or trailing whitespace

/// </summary>

public string NotesIfAny { get; private set; }

public EmployeeDetails With(

Optional<string> name = new Optional<string>(),

Optional<DateTime> startDate = new Optional<DateTime>(),

Optional<Optional<string>> notesIfAny = new Optional<Optional<string>>())

{

return DefaultUpdateWithHelper.GetGenerator<EmployeeDetails>()(

this, name, startDate, notesIfAny

);

}

}

It allowed you to include a single "With" function that could change one or more of the properties in a single call like this:

var updatedEmployee = employee.With(name: "Jimbo", notesIfAny: "So lazy!");

And it would do it with magic, so you wouldn't have to write all of the "return-same-instance-if-no-values-changed" logic and it would.. erm.. well, to be honest, I've forgotten some of the finer details! But I remember that it was fun messing around with, getting my hands dirty with reflection, compiled LINQ expressions, stack-trace-sniffing (and some required JIT-method-inlining-disabling).

A couple of weeks later I wrote a follow-up, taking on board some of the feedback and criticisms in the comments and doing some performance testing. One of the ways I came up with to create the "magic With method" was only twice as slow as writing it by hand.. which, now, doesn't sound all that awesome - twice as slow is often a bad thing, but I was quite proud of it at the time!

Immutable Classes in 2015

Recently, I've been making Bridge.NET applications and I've been favouring writing immutable types for the messages passed around the system. And, again, I've gotten a bit bored of writing the same lines of code over and over -

if (value == null)

throw new ArgumentNullException("value");

and

/// <summary>

/// This will never be null

/// </summary>

and

/// <summary>

/// This will never be null, nor blank, nor have any leading or trailing whitespace

/// </summary>

Never mind contemplating writing all of those "WithName", "WithStartDate" methods (checking in them that the values have actually changed and returning the same instance back out if not). I love the benefits of having these immutable types (reducing places where it's possible for state to change makes reasoning about code soooooooo much easier) but I'm getting tired of banging out the same constructs and sentences!

So I've started on a new tack. I want to find those places where repetition is getting me down and I want to reduce it as much as possible. But I don't want to sacrifice my validation checks or the guarantees of immutability. And, again, to put this into context - I'm going to be concentrating on the classes that I write in C# but that Bridge.NET then translates into JavaScript, so there are different considerations to take into account. First of which being that Bridge doesn't support reflection and so none of the crazy stuff I was doing in "pure" C# will be possible! Not in the same way that I wrote it last time, at least..

Before I get into any silly stuff, though, I want to talk about a couple of techniques that hopefully aren't too controversial and that I think have improved my code as well as requiring me to type less.

First off is a variation of the "Optional" struct that I used in my C# library last time. Previously, as illustrated in the code at the top of this post, I was relying on argument names and comments to indicate when values may and may not be null. The "Name" property has a comment saying that it will not be null while the "NotesIfAny" property has a comment saying that it might be null - and it follows a convention of having an "IfAny" suffix, which suggests that it might not always have a value.

Instead, I want to move to assuming that all references are non-null and that values that may be null have their type wrapped in an Optional struct.

This would change the EmployeeDetails example to look like this:

public class EmployeeDetails

{

public EmployeeDetails(string name, DateTime startDate, Optional<string> notes)

{

if (string.IsNullOrWhiteSpace(name))

throw new ArgumentException("name");

Name = name.Trim();

StartDate = startDate;

Notes = !notes.IsDefined || (notes.Value.Trim() == "") ? null : notes.Value.Trim();

}

public string Name { get; private set; }

public DateTime StartDate { get; private set; }

public Optional<string> Notes { get; private set; }

}

The "IfAny" suffix is gone, along with all of the comments about null / non-null. Now the type system indicates whether a value may be null (in which case it will be wrapped in an Optional) or not.

(Note: I'll talk more about Optional later in this post - there's nothing too revolutionary or surprising in there, but it will distract from what I'm trying to build up to here).

We have lost something, though, because in the earlier code the Name and Notes fields had comments that stated that the values (if non-null, in the case of Notes) would not be blank and would not have any leading or trailing whitespace. This information is no longer included in comments, because I want to lose the comments. But, if I've solved the null / non-null problem by leveraging the type system, why not do the same with the non-blank-trimmed strings?

Introducing..

public class NonBlankTrimmedString

{

public NonBlankTrimmedString(string value)

{

if (string.IsNullOrWhiteSpace(value))

throw new ArgumentException("Must be non-null and have some non-whitespace content");

Value = value.Trim();

}

/// <summary>

/// This will never have any leading or trailing whitespace, it will never be blank

/// </summary>

public string Value { get; private set; }

public static implicit operator NonBlankTrimmedString(string value)

{

return new NonBlankTrimmedString(value);

}

public static implicit operator string(NonBlankTrimmedString value)

{

return value.Value;

}

}

Ok, so it looks like the comments are back.. but the idea is that the "will never have any leading or trailing whitespace, it will never be blank" need only appear once (in this class) and not for every property that should be non-null and non-blank and not-have-any-leading-or-trailing-whitespace.

Now the EmployeeDetails class can become:

public class EmployeeDetails

{

public EmployeeDetails(

NonBlankTrimmedString name,

DateTime startDate,

Optional<NonBlankTrimmedString> notes)

{

if (name == null)

throw new ArgumentNullException("name");

Name = name;

StartDate = startDate;

Notes = notes;

}

public NonBlankTrimmedString Name { get; private set; }

public DateTime StartDate { get; private set; }

public Optional<NonBlankTrimmedString> Notes { get; private set; }

}

This looks a lot better. Not only is there less to read and less repetitive code (and comments) to write, but the same information is still available for someone reading / using the code. In fact, I think that it's better on that front now because the constructor signature and the property types themselves communicate this information - which makes it harder to ignore than a comment does. And the type system is the primary reason that I want to write my front-end applications in C# rather than JavaScript!

However, there are still a couple of things that I'm not happy with. Firstly, in an ideal world, the constructors would magically have if-null-then-throw conditions injected for every argument - there are no arguments that should be null now; Optional is a struct and so can never be null, while any references that could be null should be wrapped in an Optional. One way to achieve this in regular C# is with IL rewriting but I'm not a huge fan of that because I have suspicions about PostSharp (that I should probably revisit one day because I'm no longer completely sure what grounds they're based on). But, aside from that, it would be of no use when writing C# for Bridge, since IL doesn't come into the process - C# source code is translated into JavaScript and IL isn't involved!

Secondly, I need to tackle the "With" function(s) and I'd like to make that as painless as possible, really. Writing them all by hand is tedious.

Get to the point, already!

So.. I've been playing around and I've written a Bridge.NET library that allows me to write something like this:

public class EmployeeDetails : IAmImmutable

{

public EmployeeDetails(

NonBlankTrimmedString name,

DateTime startDate,

Optional<NonBlankTrimmedString> notes)

{

this.CtorSet(_ => _.Name, name);

this.CtorSet(_ => _.StartDate, startDate);

this.CtorSet(_ => _.Notes, notes);

}

public NonBlankTrimmedString Name { get; private set; }

public DateTime StartDate { get; private set; }

public Optional<NonBlankTrimmedString> Notes { get; private set; }

}

Which is not too bad! Unfortunately, yes, there is some duplication still - there are three places that each of the properties are mentioned; in the constructor argument list, in the constructor body and as public properties. However, I think that this is the bare minimum number of times that they could be repeated without sacrificing any type guarantees. The constructor has to accept a typed argument list and it has to somehow map them onto properties. The properties have to repeat the types so that anyone accessing those property values knows what they're getting.

But let's talk about the positive things, rather than the negative (such as the fact that while the format shown above is fairly minimal, it's still marginally more complicated in appearance than a simple mutable type). Actually.. maybe we should first talk about the weird things - like what is this "CtorSet" method?

"CtorSet" is an extension method that sets a specified property on the target instance to a particular value. It has the following signature:

public static void CtorSet<T, TPropertyValue>(

this T source,

Func<T, TPropertyValue> propertyIdentifier,

TPropertyValue value)

where T : IAmImmutable

It doesn't just set it, though, it ensures that the value is not null first and throws an ArgumentNullException if it is. This allows me to avoid the repetitive and boring if-null-then-throw statements. I don't need to worry about cases where I do want to allow nulls, though, because I would use an Optional type in that case, which is a struct and so never can be null!

The method signature ensures that the type of the value is consistent with the type of the target property. If not, then the code won't compile. I always favour static type checking where possible, it means that there's no chance that a mistake you make will only reveal itself when a particular set of conditions are met (ie. when a particular code path is executed) at runtime - instead the error is right in your face in the IDE, not even letting you try to run it!

Which makes the next part somewhat unfortunate. The "propertyIdentifier" must be:

- A simple lambda expression..

- .. that identifies a property getter which has a corresponding setter (though it's fine for that setter to be private)..

- .. where neither the getter nor setter have a Bridge [Name] / [Template] / [Ignore] / etc.. attribute on it..

If any of these conditions are not met then the "CtorSet" method will throw an exception. But you might not find out until runtime because C#'s type system is not strong enough to describe all of these requirements.

The good news, though, is that while the C# type system itself isn't powerful enough, with Visual Studio 2015 it's possible to write a Roslyn Analyser that can pick up any invalid "propertyIdentifier" before run time, so that errors will be thrown right in your face without you ever executing the code. The even better news is that such an analyser is included in the NuGet package! But let's not get ahead of ourselves, let me finish describing what this new method actually does first..

If it's not apparent from looking at the example code above, "CtorSet" is doing some magic. It's doing some basic sort of reflection in JavaScript to work out how to identify and set the target property. Bridge won't support reflection until v2 but my code does an approximation where it sniffs about in the JavaScript representation of the "propertyIdentifier" and gets a hold of the setter. Once it has done the work to identify the setter for a given "T" and "propertyIdentifier" combination, it saves it away in an internal cache - while we can't control and performance-tune JavaScript in quite the same way that we can with the CLR, it doesn't mean that we should do the same potentially-complicated work over and over again if we don't need to!
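To give a feel for what that "sniffing" might involve, here is a rough JavaScript sketch. It is not the library's source - "getSetterName" and "setterNameCache" are names that I've made up for illustration, it assumes that properties are translated into getX / setX functions (as in the "_.getContent()" output shown earlier) and, for simplicity, it caches on the lambda's source text alone rather than on a combination of "T" and "propertyIdentifier".

```javascript
// Hypothetical sketch, not the library's source. It assumes that property
// getters / setters are translated to getX / setX functions, as in the
// "_.getContent()" output shown earlier in this post.
const setterNameCache = new Map();

function getSetterName(propertyIdentifier) {
  const source = propertyIdentifier.toString();
  if (setterNameCache.has(source)) {
    return setterNameCache.get(source);
  }
  // Expect the function to end with "return <arg>.getSomething(); }"
  const match = /return\s+[\w$]+\.get(\w+)\(\s*\)\s*;?\s*\}\s*$/.exec(source);
  if (match === null) {
    throw new Error("propertyIdentifier must be a simple property getter lambda");
  }
  const setterName = "set" + match[1];
  setterNameCache.set(source, setterName);
  return setterName;
}

console.log(getSetterName(function (_) { return _.getName(); })); // setName
```

Anything more complicated than a single property access (such as a nested "_.getA().getB()") fails the pattern match and so results in an error, which is in the spirit of the "simple lambda" restriction described above.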

Another thing to note, if you haven't already spotted it: "CtorSet" will call private setters. This has the potential to be disastrous, if it could be called without restrictions, since it could change the data on types that should be able to give the appearance of immutability (ie. classes that set their state in their constructor and have private-only setters.. the pedantic may wish to argue that classes with private setters should not be considered strictly immutable because private functions could change those property values, but it's entirely possible to have classes that have the attribute of Observational Immutability in this manner, and that's all I'm really interested in).

So there are two fail-safes built in. Firstly, the type constraint on "CtorSet" means that the target must implement the IAmImmutable interface. This is completely empty and so there is no burden on the class that implements it, it merely exists as an identifier that the current type should be allowed to work with "CtorSet".

The second protection is that once "CtorSet" has been called for a particular target instance and a particular property, that property's value is "locked" - meaning that a subsequent call to "CtorSet" for the same instance and property will result in an exception being thrown. This prevents the situation where an EmployeeDetails is initialised using "CtorSet" in its constructor but then gets manipulated externally via further calls to "CtorSet". Since the EmployeeDetails properties are all set in its constructor using "CtorSet", no-one can change them later with another call to "CtorSet". (This is actually something else that is picked up by the analyser - "CtorSet" may only be called from within a constructor - so if you're using this library within Visual Studio 2015 then you wouldn't have to worry about "CtorSet" being called from elsewhere, but if you're not using VS 2015 then this extra runtime protection may be reassuring).
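To make the "locked" idea a little more concrete, here is a minimal sketch of how that sort of once-only guard could be written in plain .NET. This is an illustration only and not the code that the library runs in JavaScript: the "PropertyLockSketch" class, its "LockOrThrow" method and the use of a property name string are all made up for the example (the real "CtorSet" works from the property lambda rather than a name).

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.CompilerServices;

// Hypothetical helper: remembers which (instance, property) pairs have been set once
public static class PropertyLockSketch
{
    // ConditionalWeakTable keys on reference identity and does not keep the
    // tracked instances alive, so recording them here does not leak memory
    private static readonly ConditionalWeakTable<object, HashSet<string>> _locked =
        new ConditionalWeakTable<object, HashSet<string>>();

    public static void LockOrThrow(object target, string propertyName)
    {
        if (target == null)
            throw new ArgumentNullException("target");
        if (propertyName == null)
            throw new ArgumentNullException("propertyName");

        var lockedProperties = _locked.GetOrCreateValue(target);

        // HashSet.Add returns false if the name was already recorded for this instance
        if (!lockedProperties.Add(propertyName))
            throw new InvalidOperationException("Property already set: " + propertyName);
    }
}
```

A setter helper could call "LockOrThrow" immediately before writing the value, so that the first call for an instance and property succeeds and any later call for the same pair throws.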

Now that "CtorSet" is explained, I can get to the next good bit. I have another extension method:

public static T With<T, TPropertyValue>(
    this T source,
    Func<T, TPropertyValue> propertyIdentifier,
    TPropertyValue value)
        where T : IAmImmutable

This works in a similar manner to "CtorSet" but, instead of setting a property value on the current instance, it will clone the target instance, update the property on the clone and then return the clone. The exception is when the new property value is the same as the current one, in which case this work will be bypassed and the current instance will be returned unaltered. As with "CtorSet", null values are not allowed and will result in an ArgumentNullException being thrown.

With this method, having specific "With" methods on classes is not required. Continuing with the EmployeeDetails class from the example above, if we have:

var employee = new EmployeeDetails(
    "John Smith",
    new DateTime(2014, 9, 3),
    null
);

.. and we then discover that his start date was recorded incorrectly, then this instance of the record could be replaced by calling:

employee = employee.With(_ => _.StartDate, new DateTime(2014, 9, 2));

And, just to illustrate that if-value-is-the-same-return-instance-immediately logic, if we then did the following:

var employeeUpdatedAgain = employee.With(_ => _.StartDate, new DateTime(2014, 9, 2));

.. then we could use referential equality to determine whether any change was made -

// This will be false because the "With" call specified a StartDate value that was
// the same as the StartDate value that the employee reference already had
var wasAnyChangeMade = (employeeUpdatedAgain != employee);
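For comparison, this is roughly the kind of hand-written method that the "With" extension saves you from writing on every class. It is a sketch in plain C# rather than anything taken from the library; the "EmployeeSketch" class and its "WithStartDate" method are hypothetical names used only to show the clone-unless-unchanged logic.

```csharp
using System;

// Hypothetical class, used only to illustrate the clone-unless-unchanged logic
public sealed class EmployeeSketch
{
    public EmployeeSketch(string name, DateTime startDate)
    {
        if (name == null)
            throw new ArgumentNullException("name");
        Name = name;
        StartDate = startDate;
    }

    public string Name { get; private set; }
    public DateTime StartDate { get; private set; }

    public EmployeeSketch WithStartDate(DateTime value)
    {
        // Same value: skip the work and hand back the current instance
        if (value.Equals(StartDate))
            return this;

        // Different value: shallow-copy, change the copy, leave "this" untouched
        var clone = (EmployeeSketch)MemberwiseClone();
        clone.StartDate = value;
        return clone;
    }
}
```

Because an unchanged value returns the original reference, callers can use a cheap reference comparison to decide whether anything needs to be re-rendered or re-saved.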

Bonus features

So, in this library, there are the "CtorSet" and "With" extension methods and there is an Optional type -

public struct Optional<T> : IEquatable<Optional<T>>
{
    public static Optional<T> Missing { get; }
    public bool IsDefined { get; }
    public T Value { get; }
    public T GetValueOrDefault(T defaultValue);
    public static implicit operator Optional<T>(T value);
}

This has a convenience static function -

public static class Optional
{
    public static Optional<T> For<T>(T value)
    {
        return value;
    }
}

.. which makes it easier any time that you explicitly need to create an Optional<> wrapper for a value. It lets you take advantage of C#'s type inference to save yourself from having to write out the type name yourself. For example, instead of writing something like

DoSomething(new Optional<string>("Hello!"));

.. you could just write

DoSomething(Optional.For("Hello!"));

.. and type inference will know that the Optional's type is a string.

However, this is often unnecessary due to Optional's implicit operator from "T" to Optional<T>. If you have a function

public void DoSomething(Optional<string> value)
{
    // Do.. SOMETHING
}

.. then you can call it with any of the following:

// The really-explicit way
DoSomething(new Optional<string>("Hello!"));

// The rely-on-type-inference way
DoSomething(Optional.For("Hello!"));

// The rely-on-Optional's-implicit-operator way
DoSomething("Hello!");
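If it helps to see how the implicit operator and the "missing" state could fit together, below is a minimal sketch of an Optional-style struct in plain C#. It is a simplified illustration rather than the library's source: the "OptionalSketch" name is made up, and treating a wrapped null as "missing" is an assumption made for this sketch.

```csharp
using System;

// Hypothetical, simplified stand-in for an Optional-style struct
public struct OptionalSketch<T> : IEquatable<OptionalSketch<T>>
{
    private readonly T _value;
    private readonly bool _isDefined;

    public OptionalSketch(T value)
    {
        // Assumption for this sketch: wrapping null gives the "missing" state
        _isDefined = (value != null);
        _value = value;
    }

    // default(..) has _isDefined == false, so it doubles as the missing value
    public static OptionalSketch<T> Missing { get { return default(OptionalSketch<T>); } }

    public bool IsDefined { get { return _isDefined; } }

    public T GetValueOrDefault(T defaultValue)
    {
        return _isDefined ? _value : defaultValue;
    }

    // This is what allows a plain T to be passed where an OptionalSketch<T> is expected
    public static implicit operator OptionalSketch<T>(T value)
    {
        return new OptionalSketch<T>(value);
    }

    public bool Equals(OptionalSketch<T> other)
    {
        if (!_isDefined || !other._isDefined)
            return _isDefined == other._isDefined;
        return _value.Equals(other._value);
    }

    public override bool Equals(object obj)
    {
        return (obj is OptionalSketch<T>) && Equals((OptionalSketch<T>)obj);
    }

    public override int GetHashCode()
    {
        return _isDefined ? _value.GetHashCode() : 0;
    }
}
```

Since the type is a struct, a variable of this type can never itself be null; the absence of a value is always expressed through "IsDefined".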

There is also an immutable collection type, the NonNullList<T>. This has a very basic interface -

public sealed class NonNullList<T> : IEnumerable<T>
{
    public static NonNullList<T> Empty { get; }
    public int Count { get; }
    public T this[int index] { get; }
    public NonNullList<T> SetValue(int index, T value);
    public NonNullList<T> Insert(T item);
}

.. and it comes with a similar convenience static function -

public static class NonNullList
{
    public static NonNullList<T> Of<T>(params T[] values);
}

The reason for this type is that it's so common to need collections of values but there is nothing immediately available in Bridge that allows me to do this while maintaining guarantees about non-null values and immutability.

I thought about using the Facebook Immutable-Js library but..

- It's a further dependency

- I really wanted to continue the do-not-allow-null philosophy that I use with "CtorSet" and "With"

I actually considered calling the "NonNullList" type the "NonNullImmutableList" but "NonNull" felt redundant when I was trying to encourage non-null-by-default and "Immutable" felt redundant since immutability is what this library is for. So that left me with List<T> and that's already used! So I went with simply NonNullList<T>.

Immutable lists like this are commonly written using linked lists since, if the nodes are immutable, then sections of the list can often be shared between multiple lists. So, if you have a list with three items in it and you call "Insert" to create a new list with four items, with the new item as the first item in the linked list, then the following three items will be the same three node instances that existed in the original list. This reuse of data is a way to make immutable types more efficient than the naive copy-the-entire-list-and-then-manipulate-the-new-version approach would be. I'm 99% sure that this is what the Facebook library uses for the simple list type and it's something I wrote about doing in C# a few years ago if you want to read more (see "Persistent Immutable Lists").

The reason that I mention this is to try to explain why the NonNullList interface is so minimal - there are no Add, InsertAt, etc.. functions. The cheapest operations to do to this structure are to add a new item at the start of the list and to iterate through the items from the start, so I started off with only those facilities. Then I added a getter (which is an O(n) operation, rather than the O(1) that you get with a standard array) and a setter (which is similarly O(n) in cost, compared to O(1) for an array) because they are useful in many situations. In the future I might expand this class to include more List-like functions, but I haven't for now.
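The costs described above fall out of the linked-list shape, which the following cut-down sketch tries to show. It is a plain C# illustration and not the library's NonNullList<T>: the "LinkedListSketch" class and its "SharesNodesAfterFirstWith" method are hypothetical, the latter existing only so that the node sharing can be observed.

```csharp
using System;

// Hypothetical, cut-down persistent list: not the library's NonNullList<T>
public sealed class LinkedListSketch<T>
{
    private sealed class Node
    {
        public Node(T value, Node next) { Value = value; Next = next; }
        public readonly T Value;
        public readonly Node Next;
    }

    private readonly Node _head;
    private LinkedListSketch(Node head, int count) { _head = head; Count = count; }

    public static readonly LinkedListSketch<T> Empty = new LinkedListSketch<T>(null, 0);

    public int Count { get; private set; }

    // O(1): the new head node points at the existing (shared) nodes
    public LinkedListSketch<T> Insert(T item)
    {
        if (item == null)
            throw new ArgumentNullException("item");
        return new LinkedListSketch<T>(new Node(item, _head), Count + 1);
    }

    // O(n): walk from the head until the requested index is reached
    public T this[int index]
    {
        get
        {
            if ((index < 0) || (index >= Count))
                throw new ArgumentOutOfRangeException("index");
            var node = _head;
            for (var i = 0; i < index; i++)
                node = node.Next;
            return node.Value;
        }
    }

    // O(n): nodes up to and including "index" are rebuilt, nodes after it are shared
    public LinkedListSketch<T> SetValue(int index, T value)
    {
        if (value == null)
            throw new ArgumentNullException("value");
        if ((index < 0) || (index >= Count))
            throw new ArgumentOutOfRangeException("index");
        return new LinkedListSketch<T>(Replace(_head, index, value), Count);
    }

    private static Node Replace(Node node, int index, T value)
    {
        return (index == 0)
            ? new Node(value, node.Next)
            : new Node(node.Value, Replace(node.Next, index - 1, value));
    }

    // Exposed only so that the sharing can be observed in this sketch: true if
    // everything after this list's first item is the other list's own node chain
    public bool SharesNodesAfterFirstWith(LinkedListSketch<T> other)
    {
        return (_head != null) && (other != null) && ReferenceEquals(_head.Next, other._head);
    }
}
```

Inserting at the front allocates a single node regardless of the list length, whereas reading or replacing the item at index i has to walk (and, for a replacement, rebuild) the first i + 1 nodes.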

Just to make this point clear one more time: NonNullList<T> functions will throw exceptions if null values are ever specified - all values should be non-null and the type of "T" should be an Optional if null values are required (in which case none of the actual elements of the list will be null since they will all be Optional instances and Optional is a struct).

To make it easier to work with properties that are collections of items, there is another "With" method signature:

public static T With<T, TPropertyElement>(
    this T source,
    Func<T, NonNullList<TPropertyElement>> propertyIdentifier,
    int index,
    TPropertyElement value)

So, if you had a class like this -

public class Something : IAmImmutable
{
    public Something(int id, NonNullList<string> items)
    {
        this.CtorSet(_ => _.Id, id);
        this.CtorSet(_ => _.Items, items);
    }
    public int Id { get; private set; }
    public NonNullList<string> Items { get; private set; }
}

.. and an instance of one created with:

var s = new Something(1, NonNullList.Of("ZERO", "One"));

.. and you then wanted to change the casing of that second item, then you could do so with:

s = s.With(_ => _.Items, 1, "ONE");

If you specified an invalid index then it would fail at runtime, as it would if you tried to pass a null value. If you tried to specify a value that was of an incompatible type then you would get a compile error as the method signature ensures that the specified value matches the NonNullList<T>'s item type.

Getting hold of the library

If this has piqued your interest then you can get the library from NuGet - it's called "ProductiveRage.Immutable". It should work fine with Visual Studio 2013 but I would recommend that you use 2015, since then the analysers that are part of the NuGet package will be installed and enabled as well. The analysers confirm that every "property retriever" argument is always a simple lambda, such as

_ => _.Name

.. and ensure that "Name" is a property that both "CtorSet" and "With" are able to use in their manipulations*. If this is not the case, then you will get a descriptive error message explaining why.

* (For example, properties may not be used whose getter or setter has a Bridge [Name], [Template] or [Ignore] attribute attached to it).

One thing to be aware of when using Visual Studio 2015 with Bridge.Net, though, is that Bridge does not yet support C# 6 syntax. So don't get carried away with the wonderful new capabilities (like my beloved nameof). Support for this new syntax is, I believe, coming in Bridge v2..

If you want to look at the actual code then feel free to check it out at github.com/ProductiveRage/Bridge.Immutable. That's got the library code itself as well as the analysers and the unit tests for the analysers. It's the first time that I've tried to produce a full, polished analyser and I had fun! As well as a few speed bumps.. (possibly a topic for another day).

While the library, as delivered through the NuGet package, should work fine for both VS 2013 and VS 2015, building the solution yourself requires VS 2015 Update 1.

Is this proven and battle-hardened?

No.

At this point in time, this is mostly still a concept that I wanted to try out. I think that what I've got is reliable and quite nicely rounded - I've tried to break it and haven't been able to yet. And I intend to use it in some projects that I'm working on. However, for now, you might want to consider it somewhat experimental. Or you could just be brave and start using it all over the place to see if it fits in with your world view regarding how you should write C# :)

Is "IAmImmutable" really necessary?

If you've really been paying attention to all this, you might have noticed that I said earlier that the IAmImmutable interface is used to identify types that have been designed to work with "CtorSet", to ensure that you can't call "CtorSet" on references that weren't expecting it and whose should-be-private internals you could then meddle with. Well, it would be a reasonable question to ask:

Since there is an analyser to ensure that "CtorSet" is only called from within a constructor, surely IAmImmutable is unnecessary because it would not be possible to call "CtorSet" from places where it shouldn't be?

I have given this some thought and have decided (for now, at least) to stick with the IAmImmutable marker interface for two reasons:

- If you're writing code where the analyser is not being used (such as in Visual Studio versions before 2015) then it makes it harder to write code that could change private state where it should not be possible

- It avoids polluting the auto-complete matches: without the constraint, "CtorSet" and "With" would be offered against every type, even where they are not applicable (such as on the string class, for example)